Note: As of 2023, the more common term is “Frame Generation” rather than “Frame Rate Amplification Technologies”. There is also a newer supplemental Frame Generation Basics article; go check it out!

More Frame Rate With Better Graphics!

On April 4th, 2019, Oculus launched Asynchronous Space Warp 2.0, a newer method of raising frame rate that laglessly converts 45 frames per second to 90 frames per second for Oculus Rift virtual reality headsets. That is perfect timing for this Blur Busters featured article.

In the future, instead of low-detail 240fps, one can amplify high-detail 60fps into high-detail 240fps, allowing you to have your cake and eat it too on your gaming monitor!

Frame Rate Amplification Technologies (FRAT)

Increasing frame rates with less GPU horsepower is a huge area of innovation at the moment. High-Hz displays benefit hugely from Frame Rate Amplification Technologies (FRAT), our umbrella term for technologies that increase frame rates with less GPU horsepower per frame. This applies to gaming monitors, not just virtual reality headsets.

Here is a list of publicly known frame rate amplification technologies:

- Oculus Asynchronous Space Warp

- Temporal Resolution Multiplexing (new paper)

- NVIDIA Deep Learning Super Sampling (DLSS)

- Classic Interpolation

These are only the publicly released technologies! This article is derived from a forum thread that I originally posted in August 2017, updated with FRAT technologies that have since been publicly announced.

Technology: Oculus Asynchronous Space Warp

The Oculus Rift VR headset included a method of reprojecting 45 frames per second to 90 frames per second, thanks to Oculus Asynchronous Space Warp (ASW). Keeping frame rates high is critical in virtual reality, because stutters can cause headaches and nausea.

Oculus’ release of Version 2.0 of Asynchronous Space Warp is currently one of the better lagless & artifactless implementations of Frame Rate Amplification Technology (FRAT).

- For those not currently aware, see How Blur Busters Convinced Oculus Rift To Go Low Persistence for some newly revealed Blur Busters history in accelerating low persistence in VR.

- There are many similar reprojection techniques from competing virtual reality headset vendors, including PIMAX BrainWarp and HTC Vive Motion Smoothing.

- Related articles on Asynchronous Time Warp: Road To VR, and Upload VR

Technology: Temporal Resolution Multiplexing

A new paper for IEEE VR 2019 from the University of Cambridge, by co-authors Gyorgy Denes, Kuba Maruszczyk, George Ash, and Rafał K. Mantiuk, has revealed a brand new frame rate amplification technology.

Basically, the technique is to create low-resolution frames between high-resolution frames, and then upconvert the low-resolution frames using information from the high-resolution frames.

- There is also a large PDF paper on this algorithm, as well as supplementary material.

- This FRAT can lower frame transmission bandwidth over video cables (or video streaming), and offload this algorithm to the display side.

- This FRAT could also be useful for streaming full-power virtual reality from a powerful tower computer to low-powered wireless VR headsets (such as Oculus GO).

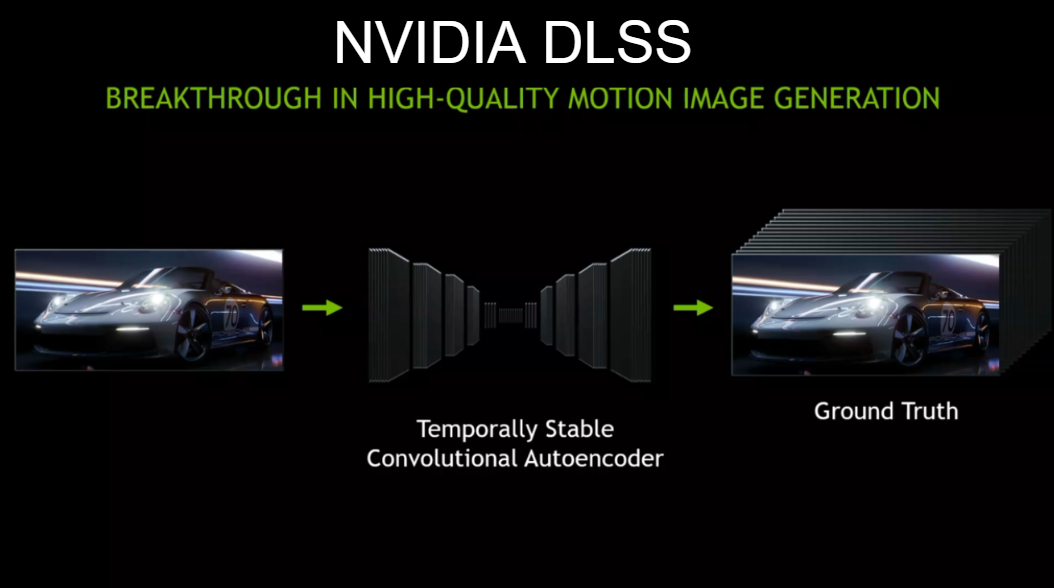
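As a rough illustration of why alternating full-resolution and reduced-resolution frames saves rendering work, the sketch below counts pixels rendered per second. The resolution, frame rate and the 0.5 per-axis scale factor are assumptions chosen for illustration, not figures from the paper, and pixel count is only a crude proxy for GPU cost.

```python
def pixels_per_second(width, height, fps, low_res_scale=1.0):
    """Pixels rendered per second when every other frame is rendered at
    low_res_scale (per axis) of full resolution.

    low_res_scale=1.0 means every frame is full resolution.
    """
    full = width * height
    low = int(width * low_res_scale) * int(height * low_res_scale)
    # Half of the frames are full resolution, half are reduced resolution.
    return (full + low) * fps / 2


# Hypothetical example: 1920x1080 at 90 fps, low-res frames at half size per axis.
baseline = pixels_per_second(1920, 1080, 90)
multiplexed = pixels_per_second(1920, 1080, 90, low_res_scale=0.5)
savings = 1 - multiplexed / baseline
print(f"{savings:.1%} fewer pixels rendered")
```

The upconversion step itself is not modelled here; the sketch only shows where the rendering savings come from.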

Technology: NVIDIA Deep Learning Super Sampling (DLSS)

NVIDIA released Deep Learning Super Sampling (DLSS) when they launched GeForce RTX.

While not yet as perfectly artifactless as Oculus Asynchronous Space Warp 2.0, it is a great first implementation from NVIDIA, and can only improve from here. Currently, it does not yet provide major frame rate amplification ratios (like 2:1 or 5:1 frame rate increases).

- This is not a “between-frames” Frame Rate Amplification Technology (FRAT) like the other technologies. All frames are rendered at lower resolution and then upconverted using AI.

- It includes many FRAT attributes of other existing techniques such as artificial intelligence (including AI Interpolation) and lower resolution frames (like Temporal Resolution Multiplexing).

Technology: Classic Interpolation

Since the beginning of this century, many HDTVs have included interpolation to convert 60 frames per second to 120 frames per second. Historically, interpolation had very high latency and very bad artifacts, making it unsuitable for gaming. This is now changing with more modern AI interpolation in new TVs.

A popular television testing website, RTINGS, which uses Blur Busters testing techniques, has an excellent video about classic interpolation technologies.

- There is a huge number of scientific papers on motion interpolation, and older 1980s papers on interpolation for television format conversion (between PAL 50Hz and NTSC 60Hz).

- More recently, artificial intelligence interpolation in some new televisions can smartly fix interpolation artifacts and make more accurate guesses of the “fake frames” between real frames.

- To reduce lag, 2018+ Samsung televisions now have a low-lag Game Motion Plus interpolation mode for gaming consoles, to amplify frame rates from 30fps-60fps into 120fps!

- A popular PC application for amplifying the frame rates of video files is Smooth Video Project.

Although classic interpolation is the oldest frame rate amplification technology, dating back twenty years, innovations in video motion interpolation continue.

Are They “Fake Frames”?

Not necessarily anymore! In the past, classic interpolation added very fake-looking frames in between real frames.

However, some newer frame rate amplification technologies (such as Oculus ASW 2.0) are less black-box and more intelligent. They use high-frequency extra data (e.g. 1000Hz head trackers, 1000Hz mouse movements) as well as additional information (texture memory, Z-Buffer memory) to create increasingly accurate intermediate 3D frames with less horsepower than a full GPU render from scratch. So don’t call them “Fake Frames” anymore, please!
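To illustrate how fresh head-tracker data can stand in for a full re-render, here is a deliberately simplified, rotation-only sketch. It estimates how far a previously rendered frame would need to shift horizontally to match a newer yaw reading. This is not Oculus's ASW algorithm; the pinhole-camera model, the function name and all numbers are illustrative assumptions.

```python
import math


def yaw_shift_pixels(yaw_delta_deg, horizontal_fov_deg, width_px):
    """Horizontal shift, in pixels at the image centre, that re-aims an
    already rendered frame after the head has turned by yaw_delta_deg.

    Assumes an ideal pinhole projection with no lens distortion.
    """
    # Focal length in pixels for the given field of view.
    focal_px = (width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    return focal_px * math.tan(math.radians(yaw_delta_deg))


# Hypothetical case: head turning at 100 degrees/second, and the last
# rendered frame is 11 ms old (about one 90 Hz refresh period).
yaw_rate_deg_per_s = 100.0
frame_age_s = 0.011
shift = yaw_shift_pixels(yaw_rate_deg_per_s * frame_age_s, 90.0, 1080)
print(f"shift the old frame by about {shift:.1f} px")
```

A real reprojection pass also has to deal with positional movement, depth and moving objects, which is where extra data such as the Z-Buffer comes in.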

Many film makers and content creators hate interpolation as they don’t have control over a TV’s default setting. However, game makers are able to intentionally integrate it and make it as perfect as possible (e.g. intentionally providing data such as a depth buffer and other information to eliminate artifacts).

Video Compression Equivalent Of FRAT: Predicted Frames

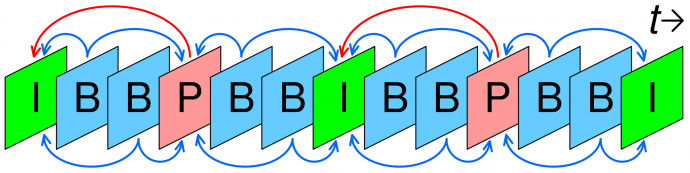

When you are watching video and films, using Netflix or YouTube or at digital movie theaters, you’re already watching material that often contains approximately 1 or 2 non-predicted frames per second, with very accurate predictive frames inserted in between them.

Modern digital video and films use various video compression standards like MPEG2, MPEG4, H.264, H.265, etc. to generate the full video and movie frame rates (e.g. 24fps, 30fps, 60fps, etc) by using very few full frames and filling the rest with predicted frames! This is a feature of video compression standards to insert “fake frames” between real frames, and these “fake frames” are now so nearly perfectly accurate that they might as well be real frames.

- Full Frames: Video including Netflix, YouTube, Blu-Ray and digital cinema are often only barely more than 1 full frame per second via I-Frames.

- Predicted Frames: The remainder of video frames are “faked” by predictive techniques via P-Frames and B-Frames. Yes, that even includes the frames at the digital projector in your local movie theater!
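The ratio of full to predicted frames is easy to work out. The sketch below assumes exactly one I-Frame per second, in line with the "barely more than 1 full frame per second" figure above; real encoders vary the interval, and the helper name is ours.

```python
def frame_breakdown(fps, i_frames_per_second=1):
    """Return (full_frames, predicted_frames, predicted_share) per second."""
    full = i_frames_per_second
    predicted = fps - full
    return full, predicted, predicted / fps


for fps in (24, 30, 60):
    full, predicted, share = frame_breakdown(fps)
    print(f"{fps} fps: {full} I-frame, {predicted} P/B-frames ({share:.0%} predicted)")
```

Under that assumption, well over nine in ten frames you watch are predicted rather than stored in full.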

3D Rendering Is Slowly Heading Towards Similar Metaphor!

Towards the year 2030, we anticipate that the GPU rendering pipeline will slowly evolve to include multiple Frame Rate Amplification Technology (FRAT) solutions where some frames are fully rendered, and the intermediate frames are generated using a frame rate amplification technology. It is possible to make this perceptually lossless with less processing power than full frame rate. Some FRAT systems incorporate video memory information (e.g. Z-Buffers, textures, raytracing history) and eliminate position guessing (e.g. 1000Hz mouse, 1000Hz headtrackers) to make frame rate amplification visually lossless.

Instead of rendering at low-detail at 240fps, one can render at high-detail at 60fps and use frame rate amplification technology (FRAT) to convert 60fps to 240fps. With newer perceptually-lossless FRAT technologies, it is possible to instead get high-detail at 240fps, having cake and eating it too!
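A minimal sketch of the 60fps-to-240fps idea: for each fully rendered frame, three further frames are generated, so the display receives four frames per render interval. The function name and the "rendered"/"generated" labels are illustrative, and the sketch only lays out timing; it does not produce any image data.

```python
def amplified_schedule(render_fps, display_fps, duration_s=1 / 60):
    """List (time_ms, kind) for each displayed frame, where kind is
    'rendered' for a full GPU render and 'generated' for an amplified frame.
    """
    if display_fps % render_fps:
        raise ValueError("display_fps must be a whole multiple of render_fps")
    ratio = display_fps // render_fps
    frame_count = round(duration_s * display_fps)
    return [
        (1000 * i / display_fps, "rendered" if i % ratio == 0 else "generated")
        for i in range(frame_count)
    ]


# One 60 fps render interval shown on a 240 Hz display.
for time_ms, kind in amplified_schedule(60, 240):
    print(f"{time_ms:6.2f} ms  {kind}")
```

The same helper works for larger ratios, for example `amplified_schedule(100, 1000, duration_s=1.0)` for a 10:1 amplification.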

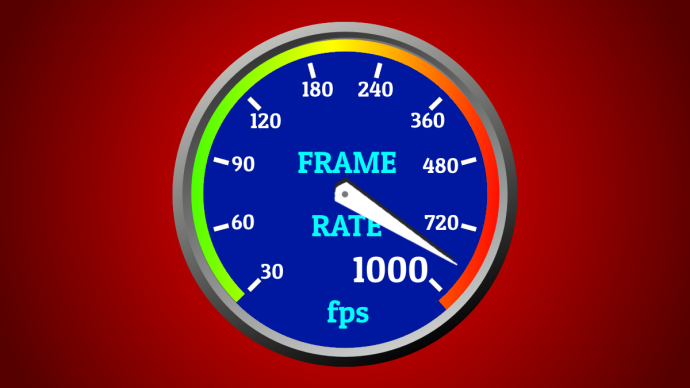

Large-Ratio Frame Rate Amplifications of 5:1 and 10:1

The higher the frame rate, the more briefly each individual frame is displayed, and the less critical imperfections in some interframes become. We envision large-ratio 5:1 or 10:1 frame rate amplification to be practical for converting 100fps to 1000fps in a perceptually lagless and lossless way.

This is Key Basic Technology For Future 1000 Hz Displays

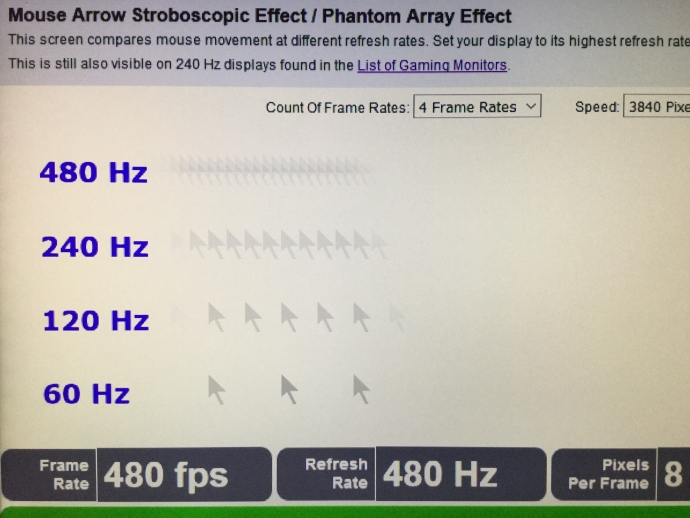

We wrote a now-famous article about the Journey to 1000Hz Displays. Recent research shows that 1000Hz displays have human-visible benefits, provided that source material (graphics, video) is properly designed and properly presented to such a display at ultra high resolutions. Many new benefits of ultra-high-Hz displays have been discovered through additional research now that such experimental displays exist. Here is a photograph of a real 480Hz display:

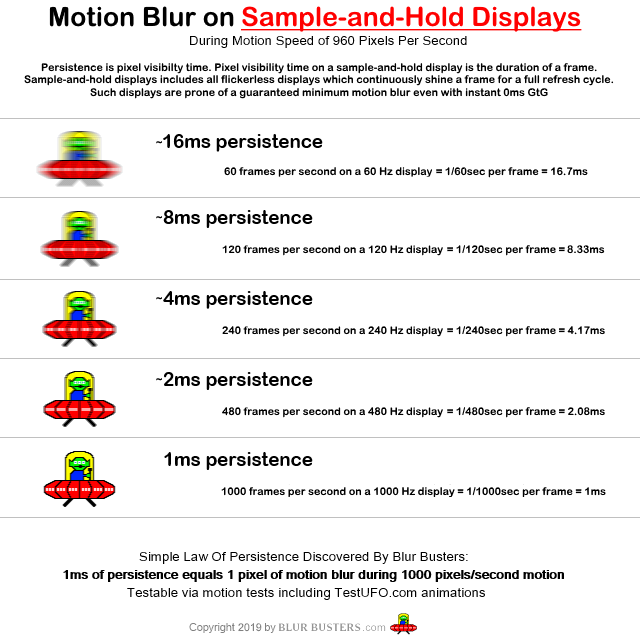

Ultra-high-Hz also behaves like a strobeless method of motion blur reduction (blurless sample-and-hold), as twice the frame rate and refresh rate halves display motion blur on a sample-and-hold display.
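The relationship can be checked with a few lines of arithmetic. On a sample-and-hold display running at full frame rate, each frame stays visible for one refresh period, and the blur seen while eye-tracking a moving object is roughly that period multiplied by the motion speed. The 1000 pixels/second speed is an arbitrary example, and the sketch ignores pixel response (GtG) time.

```python
def persistence_ms(fps):
    """Time each frame stays visible on a sample-and-hold display, in ms,
    assuming frame rate equals refresh rate."""
    return 1000 / fps


def motion_blur_px(fps, speed_px_per_s):
    """Approximate width of eye-tracking motion blur, in pixels."""
    return speed_px_per_s * persistence_ms(fps) / 1000


for fps in (60, 120, 240, 480, 1000):
    blur = motion_blur_px(fps, speed_px_per_s=1000)
    print(f"{fps:4d} fps: {persistence_ms(fps):5.2f} ms persistence, ~{blur:.1f} px blur")
```

Each doubling of frame rate halves both numbers, and only at 1000fps does persistence reach 1ms without any strobing.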

Frame Rate Amplification Can Make Strobe-Based Motion Blur Reduction Unnecessary

Our namesake, Blur Busters, was born because of display motion blur reduction technology that is found in many gaming monitors today (including LightBoost, ULMB, etc). These use impulsing techniques (backlight strobing, frame flashing, black frame insertion, phosphor decay, or other impulsing techniques).

With current low-persistence VR headsets, you can see stroboscopic artifacts when moving your head very fast (or rolling your eyes around) on high-contrast scenes. Ultra-Hz fixes this.

Making Display Motion More Perfectly Identical To Real-Life

A small but not-insignificant percentage of humans cannot use VR headsets, and cannot use gaming-monitor blur-reduction modes (ULMB, LightBoost, DyAc, etc) due to an extreme flicker sensitivity.

Accommodating a five-sigma population can never be accomplished via strobed low-persistence. Ultra-high-framerate sample-and-hold displays make blurless motion possible in a strobeless fashion, in a way practically indistinguishable from real-life analog motion.

The only way to achieve perfect motion clarity (equivalent to a CRT) on a completely flickerless display is to display sharp, low-persistence frames consecutively with no black periods in between. Achieving 1ms persistence on a sample-and-hold display requires 1000 frames per second on a 1000 Hertz display.

Frame Rate Amplification Technologies May Include Co-GPU Built Into Displays

Several public posts suggest this idea. In the far future, frame rate amplification technology may end up as a co-GPU built into displays, to reduce frame transmission bandwidth (e.g. 4K 1000Hz or 8K 1000Hz) and convert unobtainium into the possible.
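To get a feel for the bandwidth involved, here is a back-of-the-envelope estimate of uncompressed video data rates. It assumes 24 bits per pixel and ignores blanking intervals, link encoding overhead and any compression, so real link requirements will differ.

```python
def uncompressed_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Raw pixel data rate in gigabits per second (10**9 bits)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    print(f"{name} 1000Hz: ~{uncompressed_gbps(w, h, 1000):.0f} Gbit/s uncompressed")
```

Sending fewer full frames over the cable and amplifying them inside the display would cut this figure roughly in proportion to the amplification ratio.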

Future co-GPUs in displays may also decode 3D-geometry-based framerateless video formats. A futurist view is that video may someday be compressed via 3D geometry rather than traditional macroblock-based compression.

Imagine a far-future “H.268” or “H.269” 3D geometry codec. This may allow UltraHFR with crystal-sharp real-time 1000fps imagery, as traditional video is softer than computer graphics. Ultra-high-Hz displays require ultra-sharp sources for maximal visible human benefits.

More Reading

Fascinated by ultra high frame rates on ultra high refresh rates?

Read more in Blur Busters Area 51 Display Science, Research and Engineering.

- Forum Discussion — Frame Rate Amplification Technology (2017)

- Pixel Response — GTG versus MPRT: Frequently Asked Questions

- 480Hz Displays — World’s First 480Hz Monitor Review

- 1000Hz Displays — Blur Busters Law: The Amazing Journey To Future 1000Hz Displays

- 1000fps Real-Time Video — UltraHFR Video: Real-Time Ultra-High Frame Rate Video

- Stroboscopic Side Effects — Stroboscopic Effect of Finite Frame Rate Displays