G-SYNC Module

The module exploits the vertical blanking interval (the span between the previous and next frame scan) to manipulate the display’s internal timings. It performs G2G (gray-to-gray) overdrive calculations to prevent ghosting, and synchronizes the display’s refresh rate to the GPU’s render rate to eliminate tearing, along with the delayed frame delivery and accompanying stutter caused by traditional syncing methods.

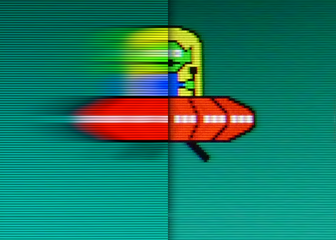

G-SYNC Demo

The Blur Busters Test UFO motion test pattern below uses motion interpolation techniques to simulate the seamless framerate transitions G-SYNC provides within the refresh rate, directly compared to standalone V-SYNC.

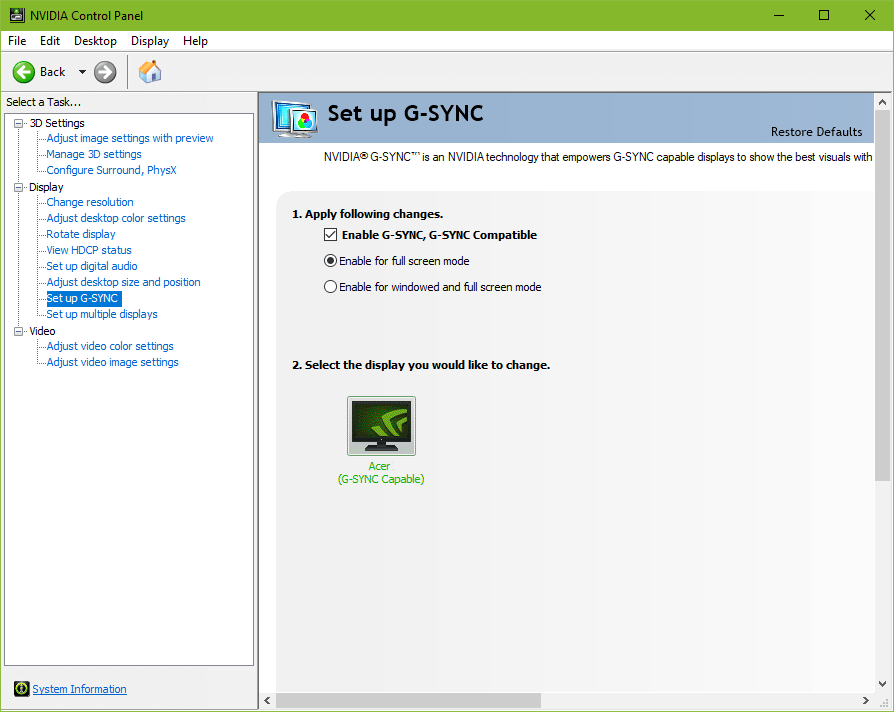

G-SYNC Activation

“Enable for full screen mode” (exclusive fullscreen functionality only) will automatically engage when a supported display is connected to the GPU. If G-SYNC behavior is suspect or non-functioning, untick the “Enable G-SYNC, G-SYNC Compatible” box, apply, re-tick, and apply.

G-SYNC Windowed Mode

“Enable for windowed and full screen mode” allows G-SYNC support for windowed and borderless windowed mode. This option was introduced in a 2015 driver update. By manipulating the DWM (Desktop Window Manager) framebuffer, it enables G-SYNC’s VRR (variable refresh rate) to synchronize to the focused window’s render rate; unfocused windows remain at the desktop’s fixed refresh rate until they are focused.

G-SYNC only functions on one window at a time, so any unfocused window that contains moving content will appear to stutter or slow down. This is one reason why a variety of non-gaming applications (popular web browsers among them) include predefined Nvidia profiles that disable G-SYNC support.

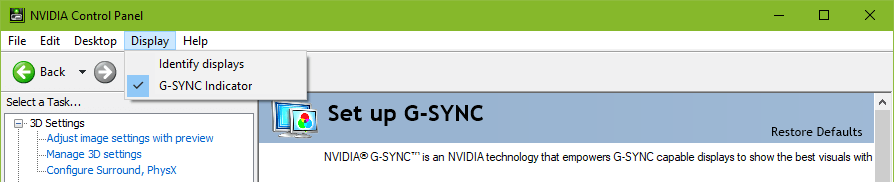

Note: this setting may require a game or system restart after application; the “G-SYNC Indicator” (Nvidia Control Panel > Display > G-SYNC Indicator) can be enabled to verify it is working as intended.

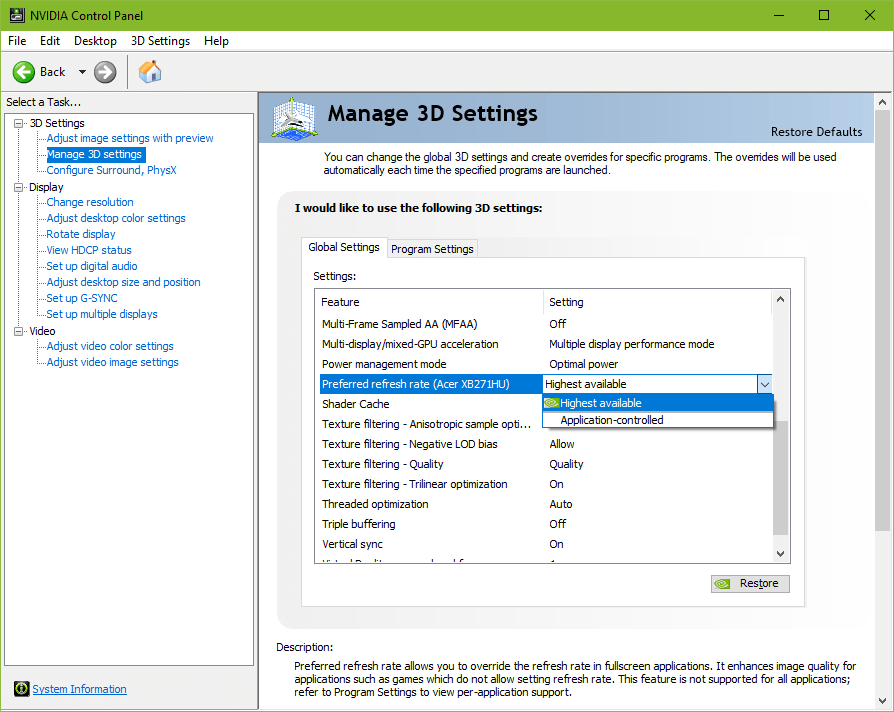

G-SYNC Preferred Refresh Rate

“Highest available” automatically engages when G-SYNC is enabled, and overrides the in-game refresh rate selector (if present), defaulting to the highest supported refresh rate of the display. This is useful for games that don’t include a selector, and ensures the display’s native refresh rate is utilized.

“Application-controlled” adheres to the desktop’s current refresh rate, or defers control to games that contain a refresh rate selector.

Note: this setting only applies to games being run in exclusive fullscreen mode. For games being run in borderless or windowed mode, the desktop dictates the refresh rate.
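The rules above can be summarized as a small decision function. The following Python sketch is purely illustrative: the function name, parameter names, and setting strings are hypothetical and do not correspond to any Nvidia API. It only encodes the behavior described in this section.

```python
from typing import Optional


def effective_refresh_hz(
    preferred: str,
    exclusive_fullscreen: bool,
    desktop_hz: int,
    display_max_hz: int,
    in_game_hz: Optional[int] = None,
) -> int:
    """Return the refresh rate a game would end up running at.

    All names here are hypothetical; `in_game_hz` is None when the game
    has no refresh rate selector.
    """
    # The setting only applies in exclusive fullscreen; otherwise the
    # desktop dictates the refresh rate.
    if not exclusive_fullscreen:
        return desktop_hz
    if preferred == "highest_available":
        # Overrides any in-game selector.
        return display_max_hz
    if preferred == "application_controlled":
        # Defer to the game's selector if it has one, else use the desktop rate.
        return in_game_hz if in_game_hz is not None else desktop_hz
    raise ValueError(f"unknown setting: {preferred!r}")


# A 144 Hz display with the desktop set to 60 Hz:
print(effective_refresh_hz("highest_available", True, 60, 144, in_game_hz=60))
print(effective_refresh_hz("application_controlled", True, 60, 144))
```

In this model, a borderless or windowed game always returns `desktop_hz`, which is why the desktop refresh rate should be set to the display's maximum when not using exclusive fullscreen.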

G-SYNC & V-SYNC

G-SYNC (GPU Synchronization) works on the same principle as double buffer V-SYNC: buffer A begins to render frame A and, upon completion, scans it to the display. Meanwhile, as buffer A finishes scanning its first frame, buffer B begins to render frame B and, upon completion, scans it to the display, and the cycle repeats.

The primary difference between G-SYNC and V-SYNC is the method in which rendered frames are synchronized. With V-SYNC, the GPU’s render rate is synchronized to the fixed refresh rate of the display. With G-SYNC, the display’s VRR (variable refresh rate) is synchronized to the GPU’s render rate.
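To make the difference concrete, the sketch below compares when finished frames would reach the screen under each method. It is a deliberately simplified model, not a description of the actual driver or module logic: it assumes frames are scanned out instantly, ignores the back-pressure V-SYNC places on the GPU, and ignores the lower bound of the VRR range. The function names and the sample frame times are made up for illustration.

```python
import math


def vsync_display_times(render_done_ms, refresh_hz):
    """Fixed refresh: each frame waits for the next free refresh tick."""
    period = 1000.0 / refresh_hz
    shown = []
    last_tick = -1
    for t in render_done_ms:
        # First tick at or after the frame is ready, and after the previous frame's tick.
        tick = max(math.ceil(t / period), last_tick + 1)
        shown.append(tick * period)
        last_tick = tick
    return shown


def vrr_display_times(render_done_ms, max_refresh_hz):
    """Variable refresh: the display refreshes when the frame is ready,
    but never faster than its maximum refresh rate allows."""
    min_period = 1000.0 / max_refresh_hz
    shown = []
    last = -math.inf
    for t in render_done_ms:
        start = max(t, last + min_period)
        shown.append(start)
        last = start
    return shown


# Hypothetical frame completion times (ms) on a 100 Hz display.
done = [12.0, 25.0, 31.0, 48.0]
print(vsync_display_times(done, 100))
print(vrr_display_times(done, 100))
```

In this model, the fixed-refresh path delays each frame until the next 10 ms tick, while the variable-refresh path shows a frame as soon as it is ready unless it arrives less than one minimum refresh period after the previous one.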

Upon its release, G-SYNC’s ability to fall back on fixed refresh rate V-SYNC behavior when exceeding the maximum refresh rate of the display was built-in and non-optional. A 2015 driver update later exposed the option.

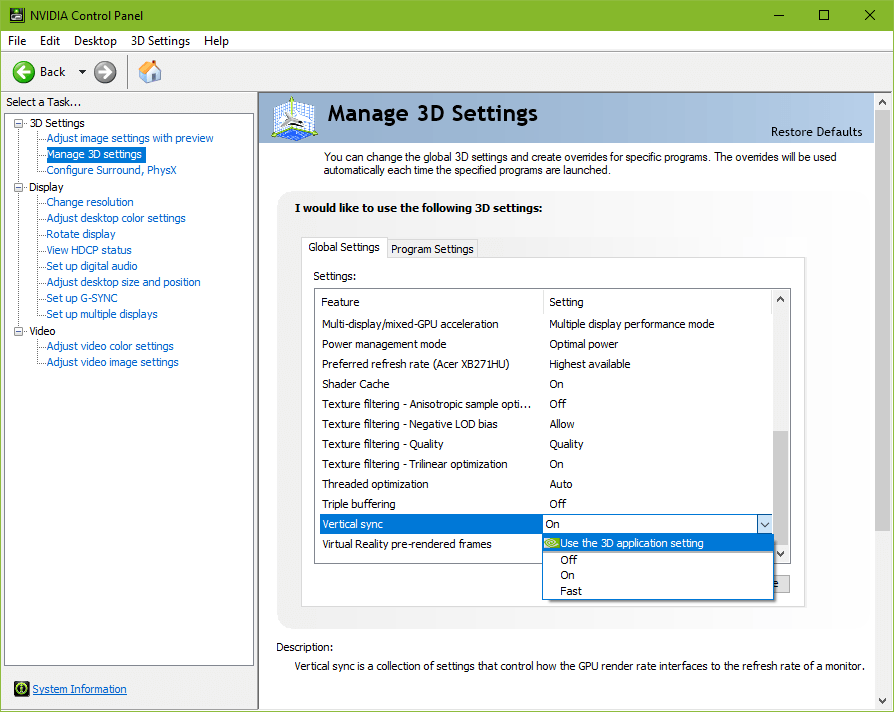

This update led to recurring confusion, creating a misconception that G-SYNC and V-SYNC are entirely separate options. However, with G-SYNC enabled, the “Vertical sync” option in the control panel no longer acts as V-SYNC. Instead, it dictates two things. One, it dictates whether the G-SYNC module compensates for frametime variances output by the system, which prevents tearing at all times (G-SYNC + V-SYNC “Off” disables this behavior; see G-SYNC 101: Range). Two, it dictates whether G-SYNC falls back on fixed refresh rate V-SYNC behavior: if V-SYNC is “On,” G-SYNC will revert to V-SYNC behavior above its range; if V-SYNC is “Off,” G-SYNC will disable above its range, and tearing will begin display wide.

Within its range, G-SYNC is the only syncing method active, no matter the V-SYNC “On” or “Off” setting.
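The combined behavior can be expressed as a small truth table in code. This is a minimal sketch under stated assumptions: the names `SyncState` and `gsync_state` are hypothetical, only the upper bound of the G-SYNC range is modeled, and a framerate exactly equal to the maximum refresh rate is treated as within range for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncState:
    active_sync: str              # "G-SYNC", "V-SYNC", or "none (tearing)"
    frametime_compensation: bool  # whether frametime variances are compensated


def gsync_state(fps: float, max_refresh_hz: float, vsync_on: bool) -> SyncState:
    """Model what the "Vertical sync" setting does while G-SYNC is enabled."""
    if fps <= max_refresh_hz:
        # Within range, G-SYNC is the only active syncing method.
        active = "G-SYNC"
    elif vsync_on:
        # Above range with V-SYNC "On": fall back to fixed refresh V-SYNC behavior.
        active = "V-SYNC"
    else:
        # Above range with V-SYNC "Off": G-SYNC disables and tearing begins.
        active = "none (tearing)"
    # Frametime compensation follows the V-SYNC setting regardless of range.
    return SyncState(active, vsync_on)


for fps in (100, 200):
    for vsync_on in (True, False):
        print(fps, vsync_on, gsync_state(fps, 144, vsync_on))
```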

Currently, when G-SYNC is enabled, the control panel’s “Vertical sync” entry is automatically set to “Use the 3D application setting,” which defers control of V-SYNC fallback behavior and frametime compensation to the in-game V-SYNC option. This can be manually overridden by changing the “Vertical sync” entry in the control panel to “Off,” “On,” or “Fast.”