Closer to the Source*

*As of Nvidia driver version 441.87, Nvidia has made an official framerate limiting method available in the NVCP; labeled “Max Frame Rate,” it is a CPU-level FPS limiter, and as such, is comparable to the RTSS framerate limiter in both frametime performance and added delay. The Nvidia framerate limiting solutions tested below are legacy, and their results do not apply to the “Max Frame Rate” limiter.

Up until this point, an in-game framerate limiter has been used exclusively to test FPS-limited scenarios. However, in-game framerate limiters aren’t available in every game, and while they aren’t required for games where the framerate can’t meet or exceed the maximum refresh rate, if the system can sustain the framerate above the refresh rate, and a said option isn’t present, an external framerate limiter must be used to prevent V-SYNC-level input lag instead.

In-game framerate limiters, being at the game’s engine-level, are almost always free of additional latency, as they can regulate frames at the source. External framerate limiters, on the other hand, must intercept frames further down the rendering chain, which can result in delayed frame delivery and additional input latency; how much depends on the limiter and its implementation.

RTSS is a CPU-level FPS limiter, which is the closest an external method can get to the engine-level of an in-game limiter. In my initial input lag tests on my original thread, RTSS appeared to introduce no additional delay when used with G-SYNC. However, it was later discovered disabling CS:GO’s “Multicore Rendering” setting, which runs the game on a single CPU-core, caused the discrepancy, and once enabled, RTSS introduced the expected 1 frame of delay.

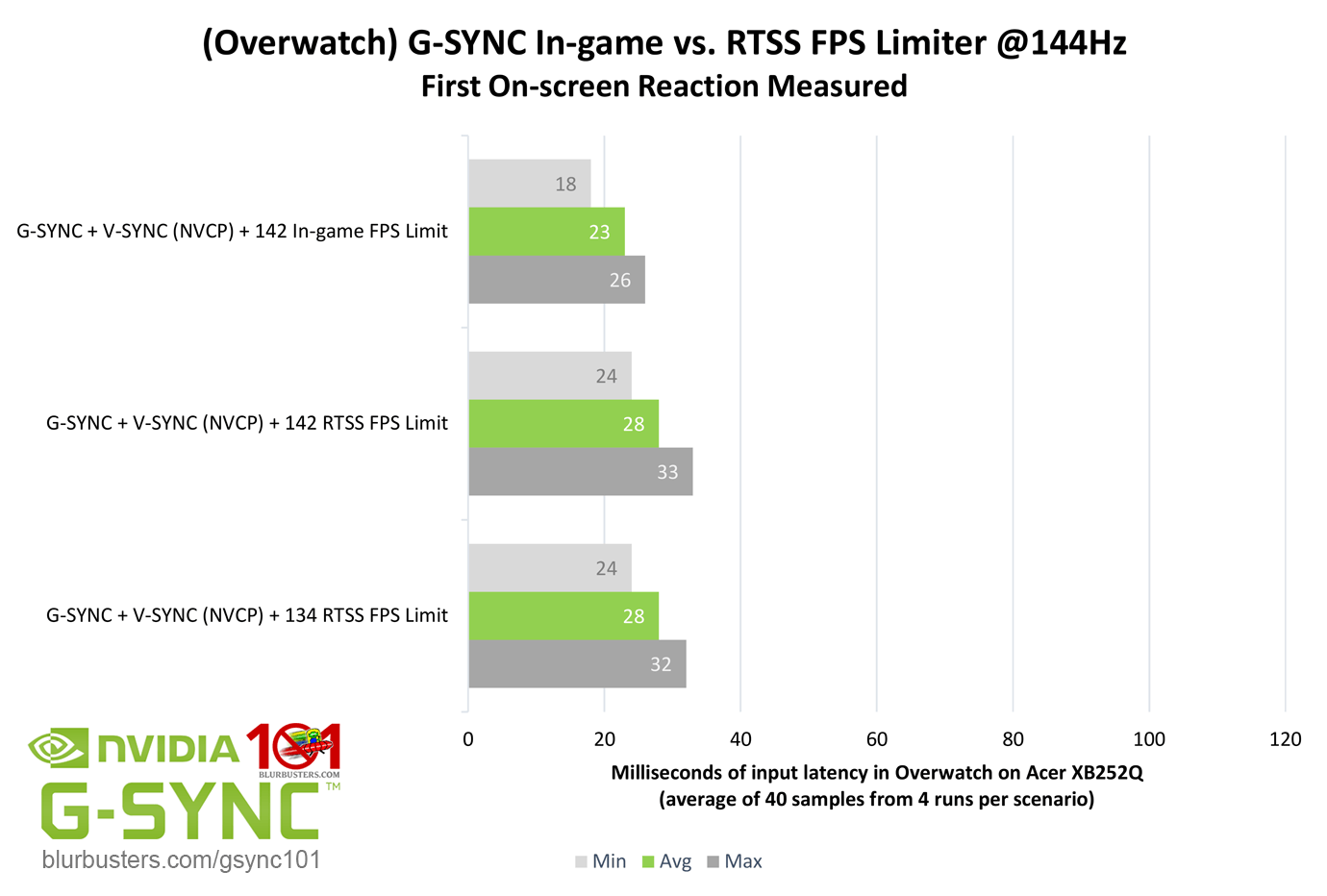

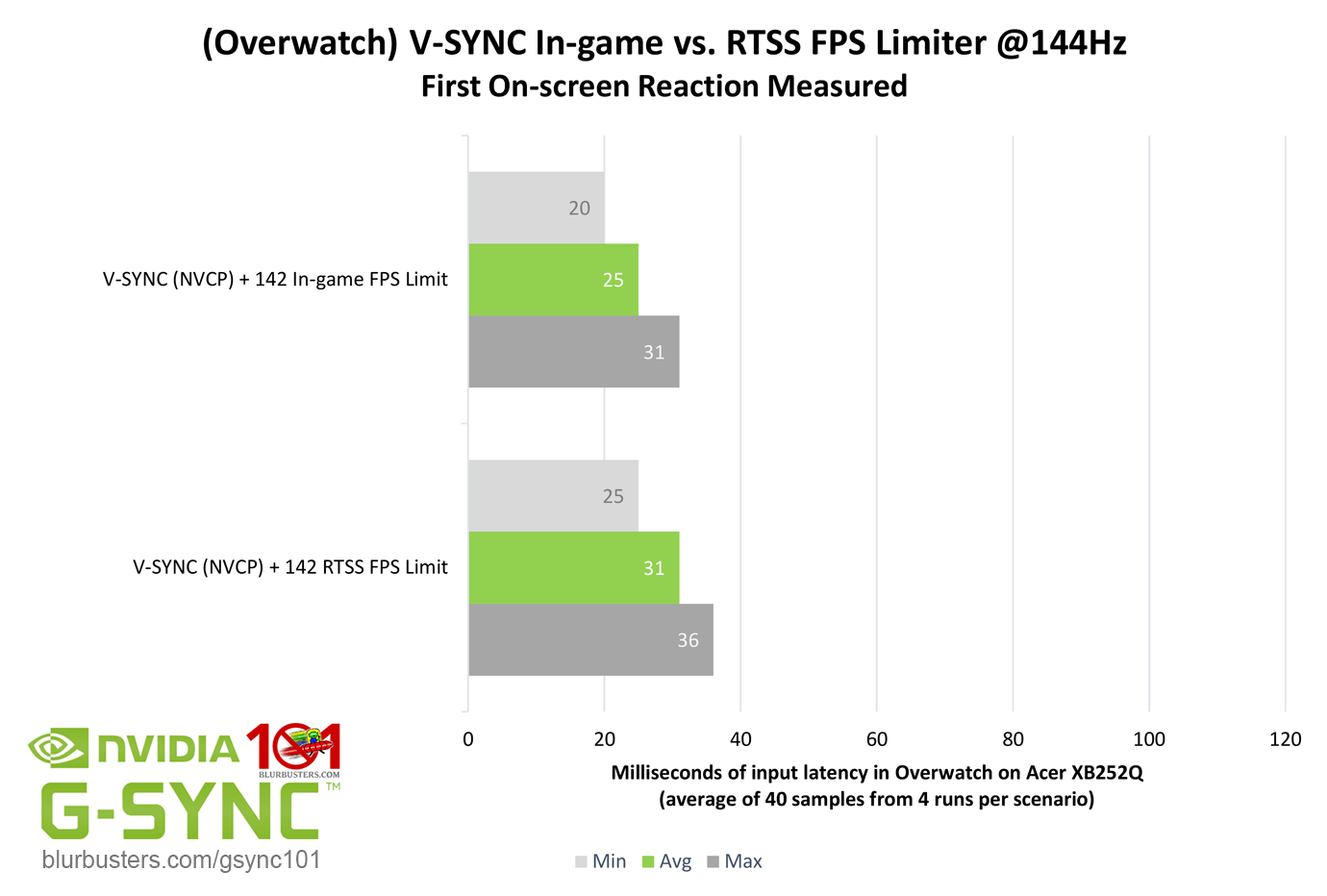

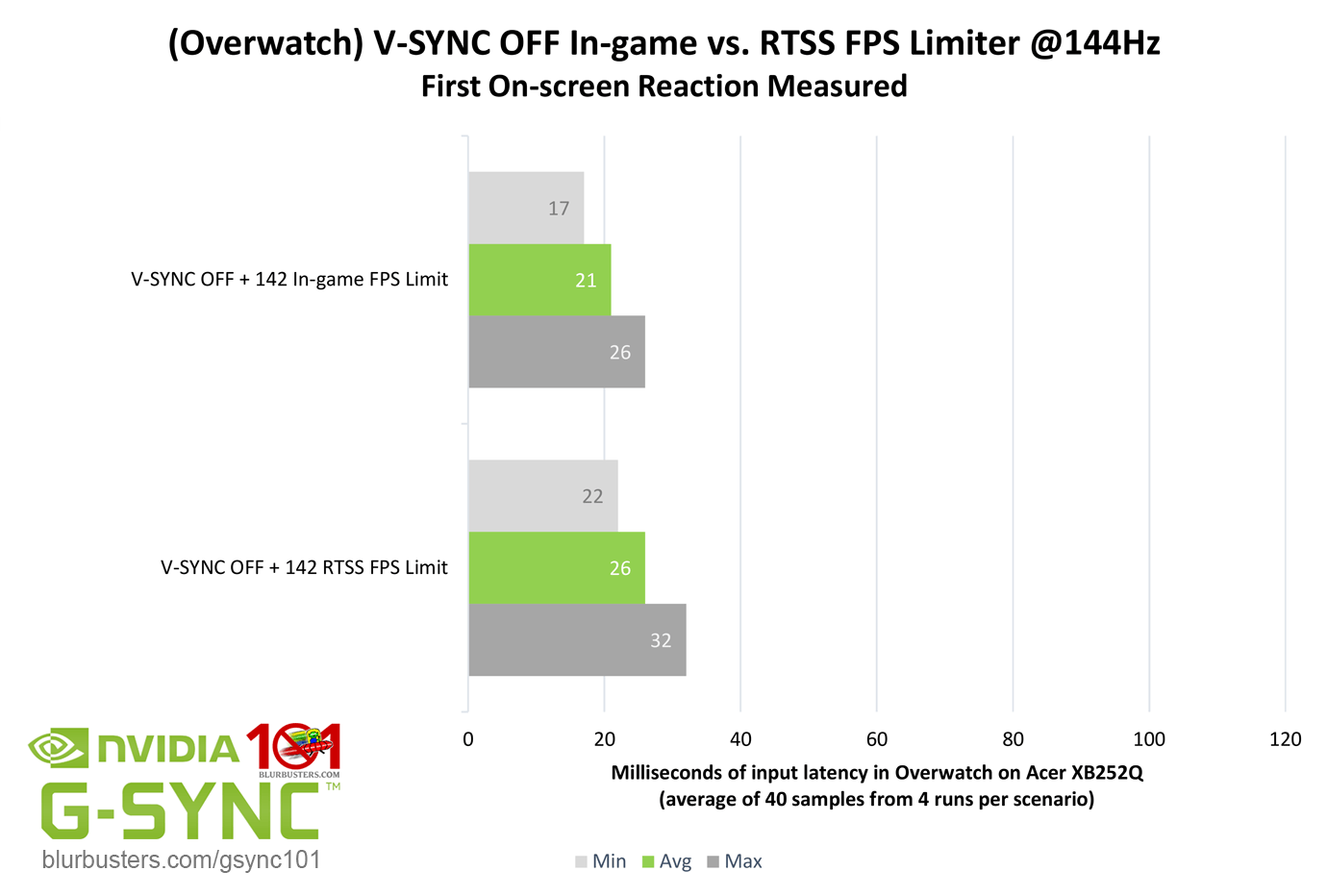

Seeing as the CS:GO still uses DX9, and is a native single-core performer, I opted to test the more modern “Overwatch” this time around, which uses DX11, and features native multi-threaded/multi-core support. Will RTSS behave the same way in a native multi-core game?

Yes, RTSS still introduces up to 1 frame of delay, regardless of the syncing method, or lack thereof, used. To prove that a -2 FPS limit was enough to avoid the G-SYNC ceiling, a -10 FPS limit was tested with no improvement. The V-SYNC scenario also shows RTSS delay stacks with other types of delay, retaining the FPS-limited V-SYNC’s 1/2 to 1 frame of accumulative delay.

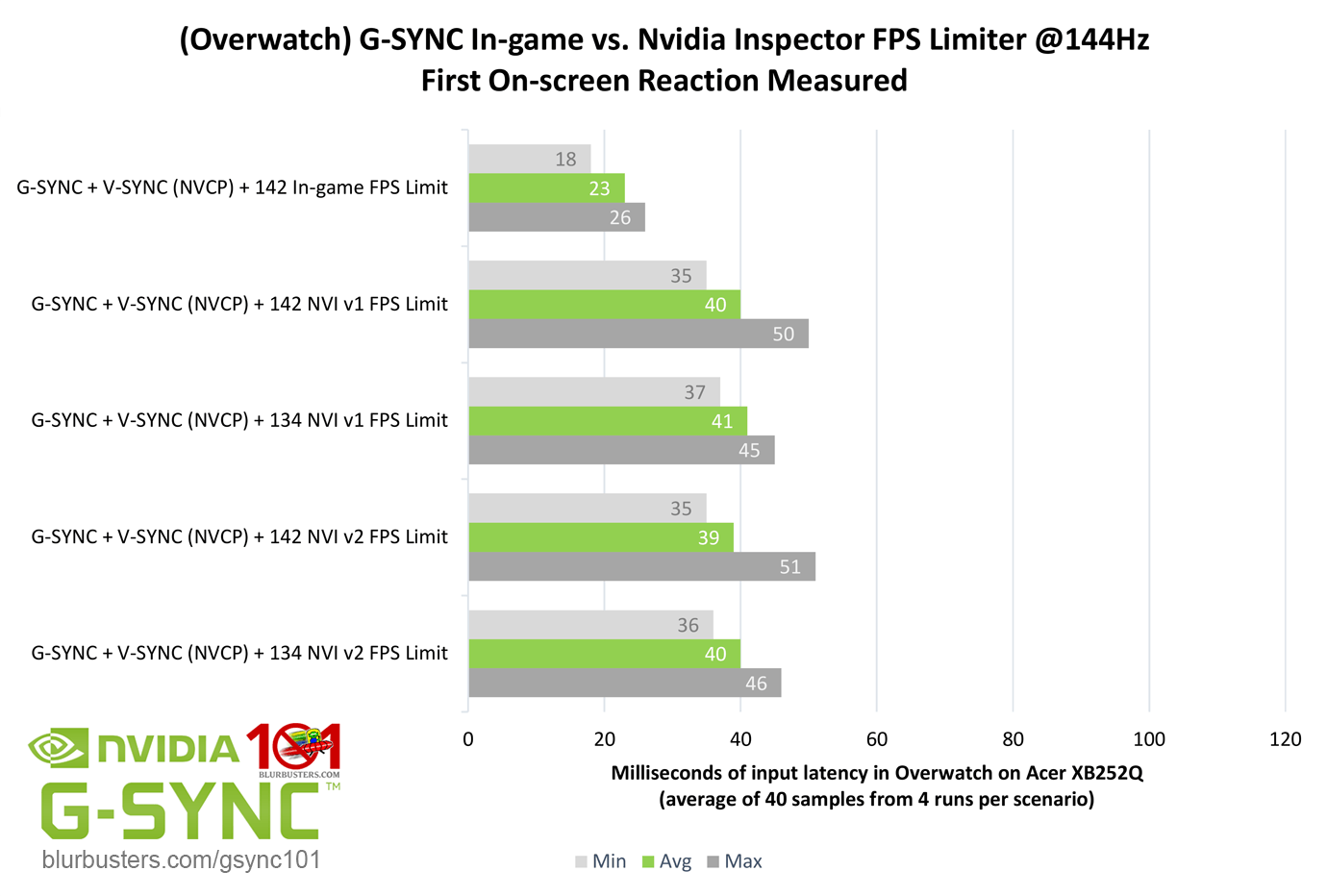

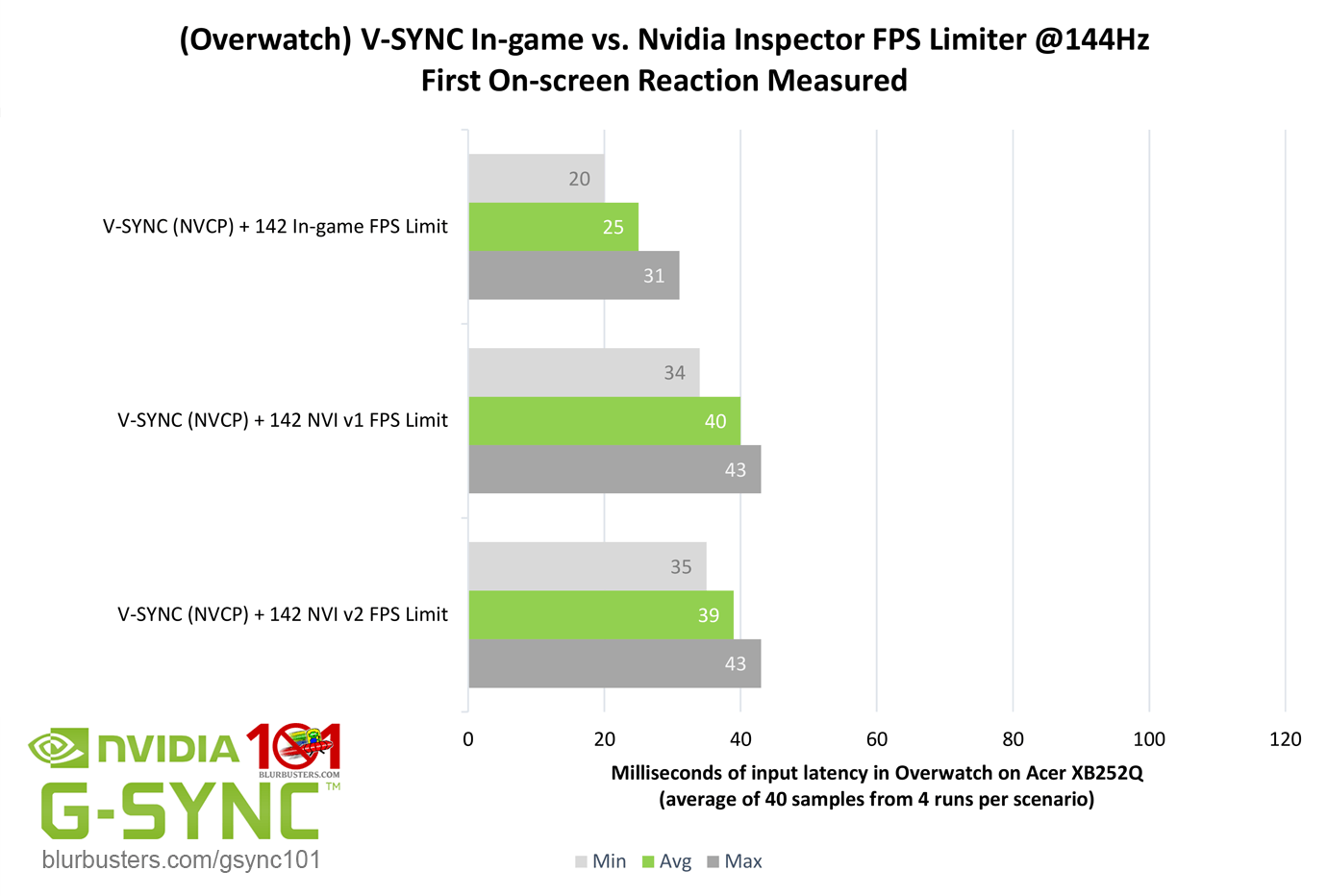

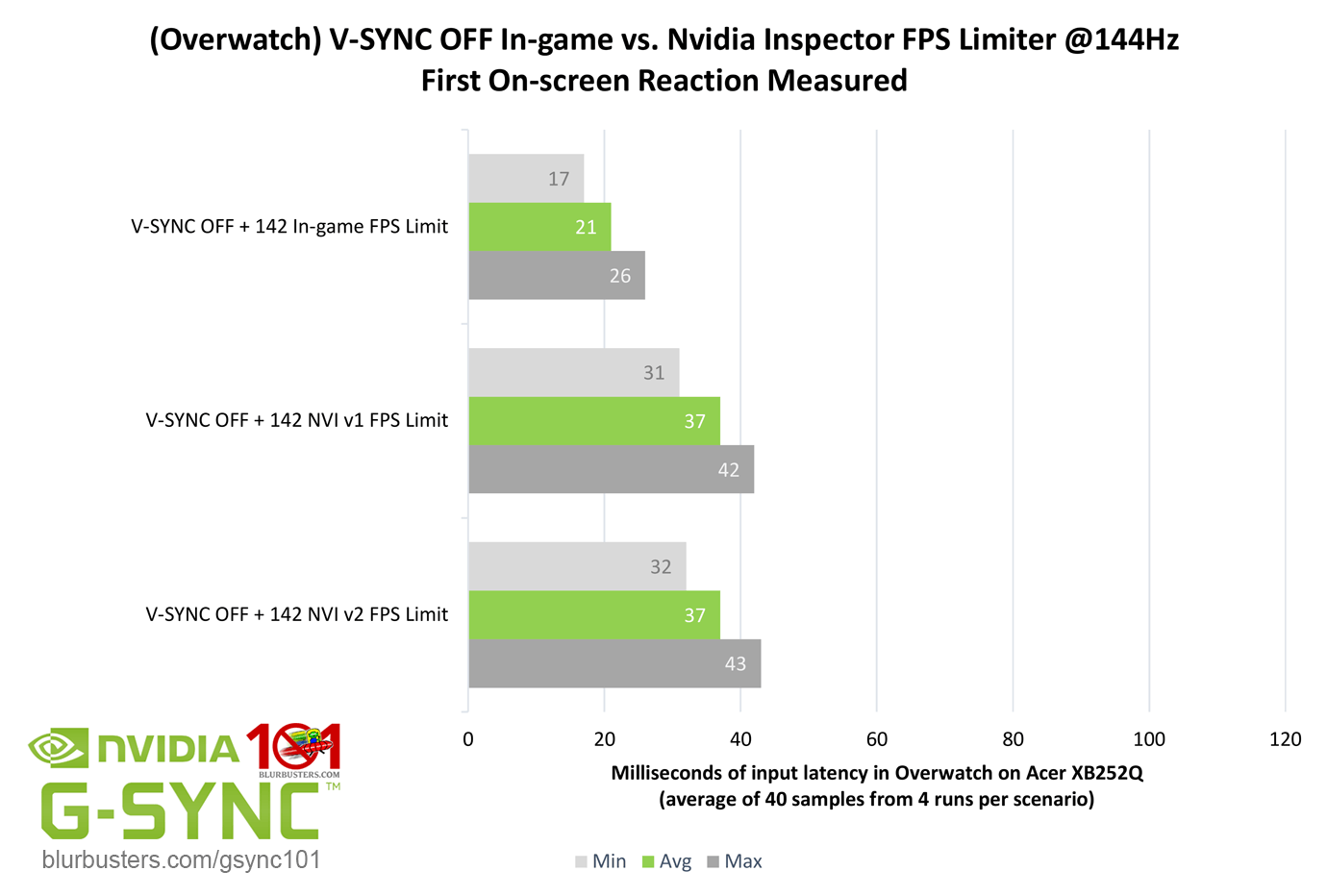

Next up is Nvidia’s FPS limiter, which can be accessed via the third-party “Nvidia Inspector.” Unlike RTSS, it is a driver-level limiter, one further step removed from engine-level. My original tests showed the Nvidia limiter introduced 2 frames of delay across V-SYNC OFF, V-SYNC, and G-SYNC scenarios.

Yet again, the results for V-SYNC and V-SYNC OFF (“Use the 3D application setting” + in-game V-SYNC disabled) show standard, out-of-the-box usage of both Nvidia’s v1 and v2 FPS limiter introduce the expected 2 frames of delay. The limiter’s impact on G-SYNC appears to be particularly unforgiving, with a 2 to 3 1/2 frame delay due to an increase in maximums at -2 FPS compared to -10 FPS, meaning -2 FPS with this limiter may not be enough to keep it below the G-SYNC ceiling at all times, and it might be worsened by the Nvidia limiter’s own frame pacing behavior’s effect on G-SYNC functionality.

Needless to say, even if an in-game framerate limiter isn’t available, RTSS only introduces up to 1 frame of delay, which is still preferable to the 2+ frame delay added by Nvidia’s limiter with G-SYNC enabled, and a far superior alternative to the 2-6 frame delay added by uncapped G-SYNC.