![Razer DeathAdder gaming mouse](/wp-content/uploads/2014/02/razer-deathadder-gallery-31-300x225.png)

Polling Rate

What is Polling Rate?

Communication between a mouse and a computer is neither instantaneous nor continuous. A USB mouse reports to the computer at fixed intervals, and the polling rate is how many of those reports happen per second. It is measured in polls per second, also known as hertz (Hz). The default polling rate for most USB mice is 125 Hz, which means one report every 8 ms.
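The time between reports is simply the reciprocal of the polling rate. A minimal Python sketch of that conversion (the function name `poll_interval_ms` is our own, for illustration):

```python
def poll_interval_ms(poll_rate_hz: float) -> float:
    """Time between two consecutive mouse reports, in milliseconds."""
    return 1000.0 / poll_rate_hz


for rate in (125, 500, 1000):
    # Worst case: a movement that happens just after a poll waits a full interval.
    print(f"{rate:>4} Hz -> up to {poll_interval_ms(rate):.1f} ms before the next report")
```

This only covers the mouse's share of the delay; it says nothing about the game or the display.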

Faster is Better

![17697](/wp-content/uploads/2014/02/176971-273x300.png)

Most new gaming mice now offer 500 Hz and 1000 Hz settings. At 1000 Hz polling, the added delay is at most 1 millisecond, compared with up to 8 ms at 125 Hz. (Note that this is only the mouse latency, not the total input lag.)

More Noticeable at Higher Frame Rates

Polling rate becomes increasingly important with higher refresh rate displays. On a standard 60 Hz display with 125 Hz mouse polling, the interval between frames is 16.7 ms, while the interval between mouse updates is 8 ms. The mouse delay is less apparent because the frame interval is so much longer. Moving to a 120 Hz refresh rate with the same 125 Hz polling brings the frame interval down to 8.3 ms, which is nearly the same as the 8 ms interval between mouse updates.
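The comparison above boils down to two reciprocals and a ratio. A small Python sketch (the helper names `interval_ms` and `polls_per_frame` are hypothetical) that reproduces the 60 Hz and 120 Hz numbers for a 125 Hz mouse:

```python
def interval_ms(rate_hz: float) -> float:
    """Time between two events that repeat rate_hz times per second, in milliseconds."""
    return 1000.0 / rate_hz


def polls_per_frame(poll_rate_hz: float, refresh_hz: float) -> float:
    """Average number of mouse reports that arrive during one displayed frame."""
    return poll_rate_hz / refresh_hz


for refresh in (60, 120):
    print(
        f"{refresh:>3} Hz display: frame every {interval_ms(refresh):.1f} ms, "
        f"125 Hz mouse every {interval_ms(125):.1f} ms, "
        f"{polls_per_frame(125, refresh):.2f} mouse reports per frame"
    )
```

At 60 Hz each frame gets roughly two mouse reports; at 120 Hz it gets only about one, which is why the polling interval starts to matter.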

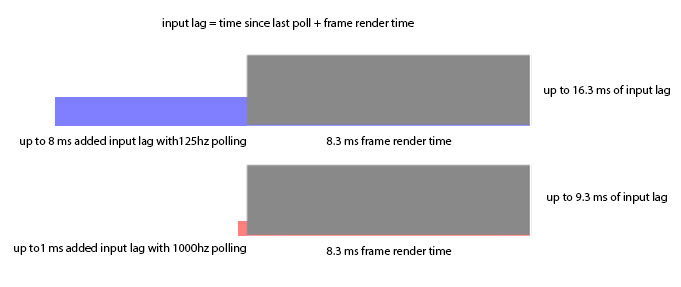

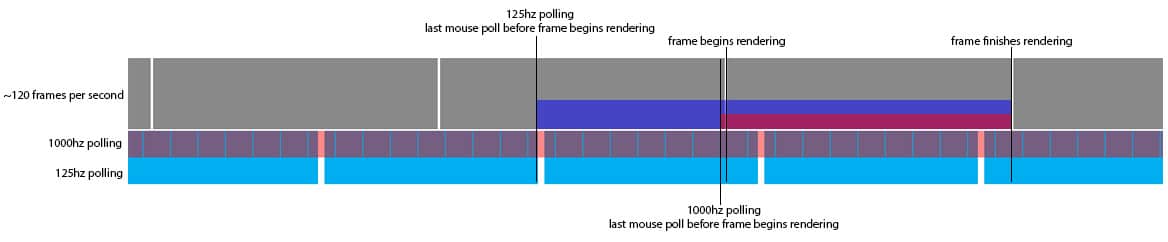

Here is a simple example comparing input lag for a 125Hz mouse and a 1000Hz mouse at 120 frames per second (1/120 s = 8.3 ms):

Note: This simplified diagram excludes other parts of the input lag chain, such as game engine latency and display latency.
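The same comparison can be approximated numerically. This is a sketch under idealized assumptions of our own: polls happen exactly every 1/poll_hz seconds, each frame reads input exactly every 1/fps seconds, both clocks start together and never drift, and everything else in the lag chain is ignored. It measures how old the newest mouse report is at the moment each frame reads input:

```python
import math
from fractions import Fraction


def input_age_ms(poll_hz: int, fps: int) -> tuple[float, float]:
    """Average and worst-case age (ms) of the newest mouse report when each frame reads input.

    Assumptions: mouse polls happen at t = j / poll_hz, frames read input at t = k / fps,
    both clocks start together at t = 0 and never drift. Engine and display latency are ignored.
    """
    ages = []
    for k in range(fps):  # one second of frames
        t = Fraction(k, fps)
        newest_poll = Fraction(math.floor(t * poll_hz), poll_hz)  # latest report at or before t
        ages.append(t - newest_poll)
    average = sum(ages, Fraction(0)) / len(ages)
    return float(average * 1000), float(max(ages) * 1000)


for poll in (125, 1000):
    avg, worst = input_age_ms(poll, 120)
    print(f"{poll:>4} Hz mouse at 120 fps: average {avg:.2f} ms, worst {worst:.2f} ms")
```

Under these aligned-clock assumptions the newest mouse data is about 3.8 ms old on average at 125 Hz, versus about 0.3 ms at 1000 Hz. Real hardware adds jitter and phase offsets, so treat the exact values as illustrative. Exact fractions are used to avoid rounding errors when a frame lands exactly on a poll.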

As you increase your frame rate, the number of mouse updates per frame goes down, and the delay between moving your mouse and seeing the effect on screen becomes more apparent. If you play at a frame rate higher than your mouse polling rate, some frames will be rendered with no new mouse input. At that point you are merely watching those frames rather than actively participating in them, and mouse movement will look very choppy.
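To see how many frames go without fresh input, one can count, for each frame, how many reports arrived since the previous frame. A minimal sketch, assuming perfectly regular polls and frames with aligned clocks (integer arithmetic, no jitter); the function name is hypothetical:

```python
def stale_frames_per_second(poll_hz: int, fps: int) -> int:
    """Count frames in one second that receive no new mouse report since the previous frame.

    Assumes perfectly regular polls and frames whose clocks start aligned at t = 0.
    """
    stale = 0
    for k in range(1, fps + 1):
        # Reports delivered by the end of frame k, minus those delivered by the end of frame k-1.
        new_reports = (k * poll_hz) // fps - ((k - 1) * poll_hz) // fps
        if new_reports == 0:
            stale += 1
    return stale


for poll in (125, 1000):
    print(f"240 fps with a {poll} Hz mouse: {stale_frames_per_second(poll, 240)} frames/s without new input")
```

In this model, a 125 Hz mouse at 240 fps leaves 115 of the 240 frames per second without any new input, while a 1000 Hz mouse leaves none.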

Some games actually update the game state at a rate faster than the video frame rate to reduce input lag. These games take advantage of higher polling rates even with a lower frame rate. For example, a game could update the physical interactions between objects based on player input at 500 Hz despite only running at a 60 Hz video frame rate. In games like these a faster polling rate reduces mouse input lag even more.
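One way to see why a fast simulation benefits from fast polling is to count how many distinct mouse reports the simulation loop actually observes. This is a hypothetical model, not any particular engine's code: the loop reads input tick_hz times per second, always gets the newest report, and all clocks are aligned and jitter-free.

```python
def distinct_samples(poll_hz: int, tick_hz: int) -> int:
    """Number of different mouse reports a loop running tick_hz times per second observes.

    Hypothetical model: tick k reads input at t = k / tick_hz and sees the newest report,
    whose index is floor(t * poll_hz). Clocks are aligned and jitter-free.
    """
    return len({(k * poll_hz) // tick_hz for k in range(tick_hz)})


for tick in (60, 500):
    for poll in (125, 1000):
        print(f"{tick:>3} Hz loop, {poll:>4} Hz mouse: {distinct_samples(poll, tick)} distinct samples/s")
```

A loop that reads input only 60 times per second sees 60 distinct samples with either mouse, but a 500 Hz simulation sees only 125 distinct samples from a 125 Hz mouse versus 500 from a 1000 Hz mouse.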

Which Polling Rate Should I Use?

To decrease input lag, you can increase the USB polling rate of the mouse. Many modern gaming mice support polling rates up to 1000 Hz, which drastically reduces mouse lag compared with 125 Hz. Most gaming mice let you adjust the polling rate through driver software or a switch on the mouse itself. For mice that don't have a polling rate setting (e.g. Logitech MX518), you can often overclock the polling rate manually.

In addition, if you are using Windows 8.1, there is a system-wide 1000Hz mouse fix which serious gamers should install to bring maximum mouse performance to all games and Windows applications.

VSYNC

What Does VSYNC Do?

VSYNC synchronizes the game's frame rate with the display's refresh rate to reduce tearing and stuttering. As long as the game keeps up with the monitor's refresh rate, there will be no tearing or stuttering. Without VSYNC, a game sends frames to the monitor as fast as it possibly can. This can lead to tearing (see TestUFO animation) if the frame rate is faster than the monitor's refresh rate. It can also cause stuttering, because the time between when a frame is created and when it is displayed is constantly changing.

Input Lag

VSYNC can increase input lag because, with VSYNC on, the game takes a mouse measurement, renders the frame, then waits until the next monitor refresh to display that frame. When VSYNC is off, the game constantly updates with new frames without waiting for the display, so the input is fresher and has less delay.
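The extra wait described above can be estimated with a simple model of our own: refreshes begin at t = 0 and repeat every 1000/refresh_hz ms, and a finished frame is held until the next refresh boundary. The function name `vsync_wait_ms` is hypothetical.

```python
import math
from fractions import Fraction


def vsync_wait_ms(ready_ms: Fraction, refresh_hz: int) -> Fraction:
    """How long a finished frame waits for the next refresh with VSYNC ON, in ms.

    Simplified model: refreshes begin at t = 0 and repeat every 1000/refresh_hz ms, and the
    frame is shown at the first refresh boundary at or after ready_ms. With VSYNC OFF the
    frame would be sent immediately (zero wait, but possibly with tearing).
    """
    period = Fraction(1000, refresh_hz)
    next_refresh = math.ceil(ready_ms / period) * period
    return next_refresh - ready_ms


for hz in (60, 120):
    wait = vsync_wait_ms(Fraction(2), hz)  # frame finished 2 ms into the refresh cycle
    print(f"{hz} Hz: frame waits {float(wait):.2f} ms for the next refresh")
```

In this model a frame that finishes 2 ms into a refresh cycle sits idle for about 14.7 ms at 60 Hz and about 6.3 ms at 120 Hz, and the mouse data inside it ages by that much before it is shown.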

Low Persistence Displays (LightBoost)

During LightBoost, mouse movement can be more fluid with VSYNC ON when running at full frame rate without frame drops. However, it also adds input lag.

If you’re playing a competitive game like Counter-Strike, it is typically better to use VSYNC OFF, as less input lag is more important than maximum fluidity. On the other hand, single-player games like BioShock Infinite can be more enjoyable with the fluidity provided by VSYNC, assuming you have enough GPU power to keep frame rates maxed out, with frame rates matching refresh rates.

Some games have built-in frame rate limiters that behave similarly to VSYNC ON, but instead of rendering the frame right away, they render it at the last possible moment to reduce input lag. It does not work perfectly, but it is a good compromise between the fluidity of full frame rate VSYNC ON (120fps at 120Hz) and the tearing of uncapped frame rates with VSYNC OFF. One example is the fps_max setting found in Source Engine games (e.g. Counter-Strike: GO).
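The benefit of rendering "at the last possible moment" can be shown with an idealized single-frame model. This is our own simplification, not how fps_max or any specific limiter is implemented: each frame is displayed exactly at the end of its slot, rendering always takes a known, fixed time, and input is read right before rendering starts.

```python
def input_age_at_display_ms(fps: int, render_ms: float, just_in_time: bool) -> float:
    """Age of the mouse sample when its frame reaches the screen, in an idealized model.

    Assumptions (illustrative only): each frame is displayed exactly at the end of its
    1000/fps ms slot, rendering always takes exactly render_ms, and input is read right
    before rendering starts.
    """
    period = 1000.0 / fps
    if render_ms > period:
        raise ValueError("render time exceeds the frame interval")
    if just_in_time:
        # Idle first, then read input and render so the frame finishes at the deadline.
        sample_time = period - render_ms
    else:
        # Read input and render immediately, then idle until the deadline (VSYNC-like).
        sample_time = 0.0
    return period - sample_time


for jit in (False, True):
    label = "render at last moment" if jit else "render, then wait"
    print(f"{label}: input is {input_age_at_display_ms(120, 3.0, jit):.2f} ms old when shown")
```

With a 3 ms render at 120 fps, the input ages a full 8.33 ms slot when rendering happens immediately, but only the 3 ms render time when it happens just in time. Real render times vary from frame to frame, which is one reason such limiters do not work perfectly.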

G-SYNC and FreeSync

With these variable refresh rate technologies, the monitor refreshes at varying intervals, so a variable frame rate does not cause stuttering: the monitor always stays in sync with the game's frame rate. Blur Busters' GSYNC Preview (Part 1 and Part 2) covers G-SYNC in more detail.
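The difference between fixed and variable refresh can be illustrated by computing when each frame reaches the screen. This is a simplified model with assumptions of our own: frames finish in order, rendering is never blocked by the display, at most one new frame is shown per minimum refresh interval, and the monitor's minimum refresh rate is never reached. Helper names are hypothetical.

```python
import math
from fractions import Fraction


def display_times(ready_ms: list[int], refresh_hz: int, variable_refresh: bool) -> list[Fraction]:
    """Time (ms) at which each finished frame appears on screen, in a simplified model.

    Fixed refresh with VSYNC: a frame is shown at the next refresh boundary (a multiple of
    1000/refresh_hz ms). Variable refresh: a frame is shown as soon as it is ready.
    In both cases at most one new frame is shown per 1000/refresh_hz ms.
    """
    period = Fraction(1000, refresh_hz)
    shown: list[Fraction] = []
    for ready in ready_ms:
        earliest = Fraction(ready)
        if shown:
            earliest = max(earliest, shown[-1] + period)
        if variable_refresh:
            shown.append(earliest)
        else:
            shown.append(math.ceil(earliest / period) * period)
    return shown


def intervals(times: list[Fraction]) -> list[float]:
    # Gaps between consecutive displayed frames; uneven gaps are perceived as stutter.
    return [round(float(b - a), 1) for a, b in zip(times, times[1:])]


frames = [20, 40, 60, 80, 100, 120]  # a steady 50 fps
print("60 Hz fixed + VSYNC:", intervals(display_times(frames, 60, variable_refresh=False)))
print("VRR (up to 144 Hz): ", intervals(display_times(frames, 144, variable_refresh=True)))
```

A steady 50 fps on a fixed 60 Hz display comes out as four 16.7 ms steps followed by a 33.3 ms step (a stutter), while the variable refresh display shows every frame exactly 20 ms apart.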

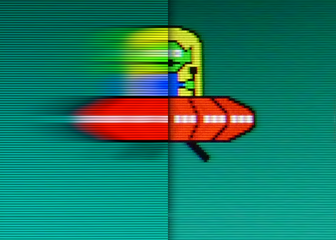

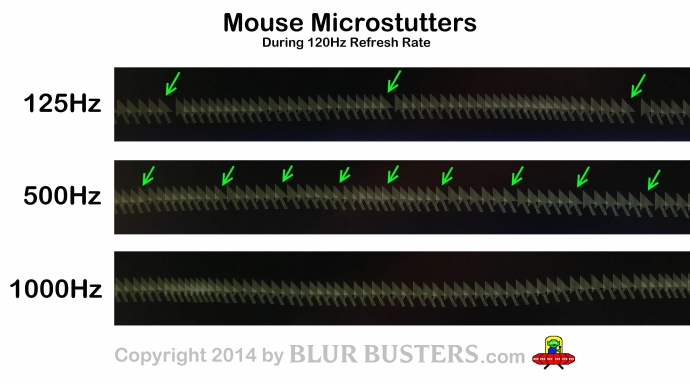

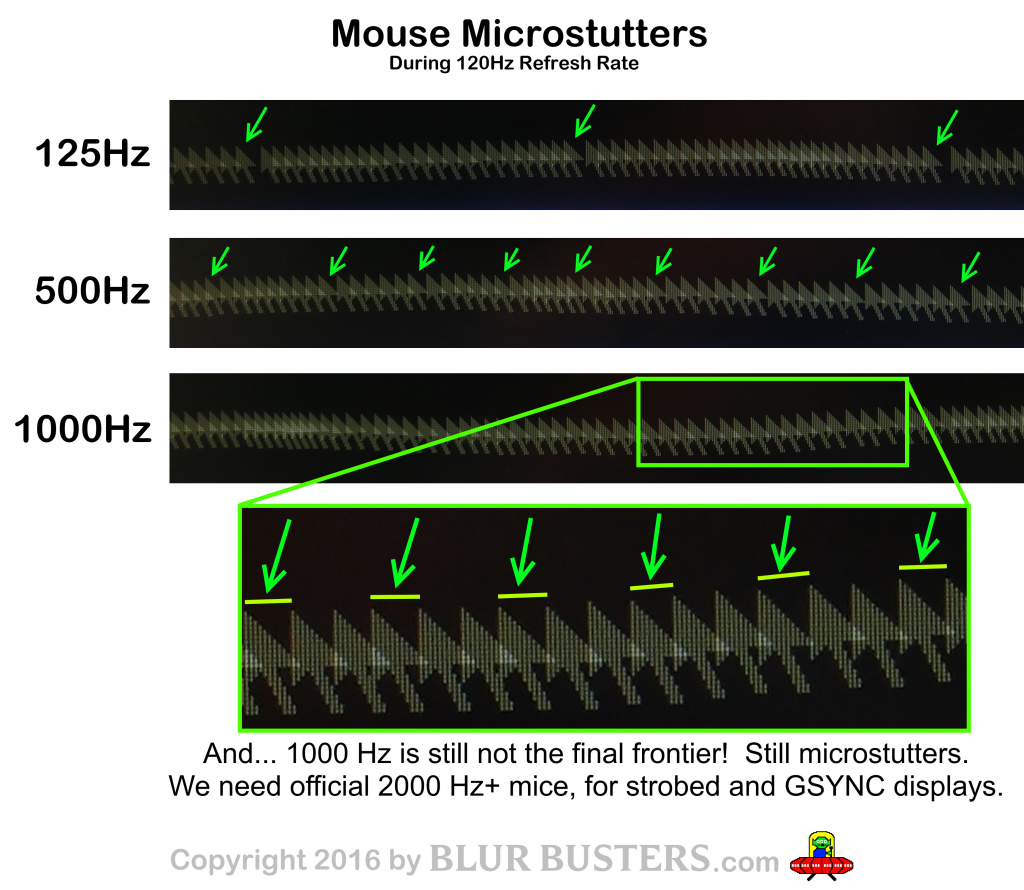

Addendum: Photos of 125Hz vs 500Hz vs 1000Hz

Originally created in a Blur Busters Forums thread and now part of this guide, this is a photo comparison of 125Hz versus 500Hz versus 1000Hz mouse poll rates. The difference between 500Hz and 1000Hz is more clearly visible to the human eye when blur reduction strobing (e.g. LightBoost) is enabled, as well as with G-SYNC, for which NVIDIA recommends a 1000Hz mouse.

You can see this by enabling motion blur reduction on your 120Hz monitor and then dragging a text window. Fewer microstutters make text easier to read while dragging.

The gapping effect is caused by the harmonic frequency difference (beat frequency) between the frame rate and the mouse poll rate. It is clearly visible when no other sources of microstutter exist, e.g. with a fast GPU, a fast CPU, and low-latency USB. This mouse microstutter is clearly visible in Source Engine games on newer GPUs at synchronized frame rates.

During 125Hz mouse poll rate versus 120fps frame rate (125 MOD 120 = 5), there are 5 microstutters per second. This results in 1 gap every 25 mouse arrow positions.

During 500Hz mouse poll rate versus 120fps frame rate (500 MOD 120 = 20), there are 20 microstutters per second. This results in 1 gap every 6 mouse arrow positions.
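The MOD rule above can be cross-checked by simulation. A minimal sketch, assuming perfectly regular polls and frames with aligned clocks (our assumption; real systems add jitter): count the frames per second that receive one more mouse report than the rest, since those frames are where the cursor jumps farther and leaves a gap.

```python
def irregular_frames_per_second(poll_hz: int, fps: int) -> int:
    """Frames per second that receive one more mouse report than the minimum.

    Model (assumption): polls and frames are perfectly regular and start aligned, so frame k
    (k = 1..fps) receives floor(k*P/F) - floor((k-1)*P/F) reports. Frames with an extra
    report move the cursor farther, which shows up as a visible gap.
    """
    base = poll_hz // fps  # reports that every frame gets at minimum
    extra = 0
    for k in range(1, fps + 1):
        reports = (k * poll_hz) // fps - ((k - 1) * poll_hz) // fps
        if reports > base:
            extra += 1
    return extra


for poll in (125, 500, 1000):
    print(f"{poll:>4} Hz at 120 fps: {irregular_frames_per_second(poll, 120)} microstutters/s "
          f"(poll MOD fps = {poll % 120})")
```

In this model the count matches poll rate MOD frame rate: 5 per second at 125 Hz and 20 per second at 500 Hz. At 1000 Hz there are more of them (40 per second), but each is smaller, because an extra report changes a 9-report step versus an 8-report step rather than a 2-report step versus a 1-report step.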

These mouse microstutters become especially visible during window dragging on low-persistence displays, such as strobed monitors or CRTs. The 500Hz vs 1000Hz difference is amplified during LightBoost, ULMB, Turbo240, and BENQ Blur Reduction.

Microsoft Agrees 1000Hz Makes A Difference

See this Microsoft Research video on 1000Hz touchscreens. It is not about mice, but it demonstrates that, surprisingly, humans can still detect improvements at 1000Hz.

Also, 1000Hz is NOT the Final Frontier!

People are now overclocking computer mice to 2000Hz+. The benefits are very minor, but we can confirm that we notice them on an ultra-low-persistence monitor (e.g. ULMB).

Questions?

Discuss gaming mice further in the Blur Busters Forums!

Ben Hansen (aka sharknice) is an indie game developer, and runs EliteOwnage.com, a website with a very detailed mouse sensitivity guide.