If you have not seen it yet, see G-SYNC Preview, Part #1 first! As I continued to test G-SYNC, I was faced with the question: What can we test that no other site has ever tested? Blur Busters is not your everyday review site, having adopted some unusual testing techniques and experiments (e.g. pursuit camera patterns, creating a strobe backlight). So, I decided to directly measure the input lag of the G-SYNC monitor.

What!? How Do We Measure G-SYNC Input Lag?

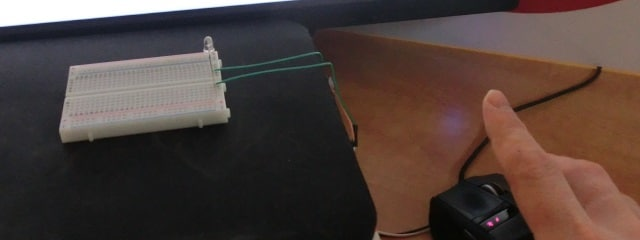

Measuring real-world input lag of G-SYNC is a very tricky endeavour. Fortunately, I invented a very accurate method of measuring real-world in-game input lag by using a high speed camera combined with a modified gaming mouse.

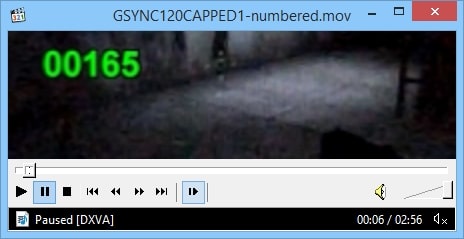

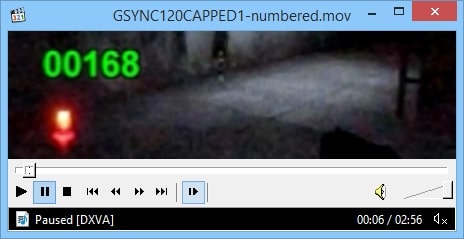

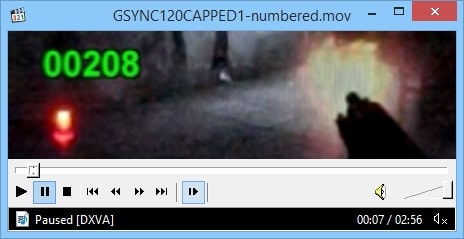

Wires from a modified Logitech G9x are attached to an LED, which illuminates instantly upon pressing the mouse button (<1ms). Using a consumer 1000fps camera, one can measure the time between the light coming from the LED and the light coming from the on-screen gunshot reaction, such as a gun movement, crosshairs flash, or muzzle flash:

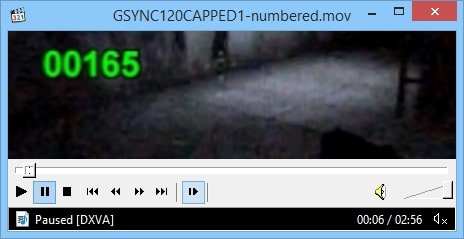

With 1000fps video, one frame is one millisecond. Conveniently, one can calculate the difference in frame numbers, between the LED illumination and the first gun reaction (whatever the first on-screen reaction is; such as a crosshairs animation, or gun moving upwards right before muzzle flash). This equals the whole-chain input lag in milliseconds, all the way from mouse, button-to-pixels! To speed up high speed analysis, I tested multiple video players to find which could do very fast forward/backward single-stepping. I found QuickTime on offline MOV files worked best (holding down K key while using J/L keys to step backwards/forwards) since YouTube unfortunately does not allow single-frame stepping. This allowed me to more rapidly obtain the input lag measurements.
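The frame-counting arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name and the frame numbers in the usage line are hypothetical, not values from my videos.

```python
def input_lag_ms(led_frame: int, reaction_frame: int, camera_fps: int = 1000) -> float:
    """Convert a frame-number difference in high speed video to milliseconds.

    led_frame      -- frame where the mouse-button LED first lights up
    reaction_frame -- frame with the first on-screen reaction
    camera_fps     -- capture rate of the camera (1000fps means 1 frame = 1ms)
    """
    if reaction_frame < led_frame:
        raise ValueError("reaction frame must not precede the LED frame")
    return (reaction_frame - led_frame) * 1000.0 / camera_fps


# Hypothetical frame numbers, as read off while single-stepping a clip.
print(input_lag_ms(led_frame=1520, reaction_frame=1592))  # 72.0
```

At 1000fps the conversion is trivial, but keeping `camera_fps` as a parameter makes the same arithmetic work for cameras with other high speed modes.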

This makes it possible to honestly measure the whole chain without missing any G-SYNC influences (G-SYNC effect on hardware, drivers & game software), and compute latency differences between VSYNC OFF versus G-SYNC, in a real-world, video-game situation.

With this, I set out to measure input lag of VSYNC OFF versus G-SYNC, to see whether G-SYNC lives up to NVIDIA’s input lag claims.

Quick Intro To Input Lag

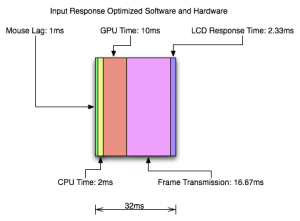

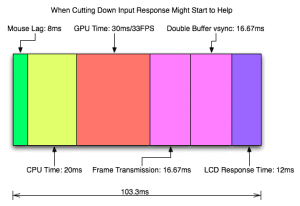

A long-time Blur Busters favorite is AnandTech’s Exploring Input Lag Inside And Out, which covers whole-chain input lag in detail, illustrating the button-to-pixels latency. This is the latency, in milliseconds, between a mouse button press, and light being emitted from pixels, seen by human eyes. This is above-and-beyond display lag, and includes several parts, such as mouse lag, CPU, GPU, frame transmission, and LCD pixel response.

Credit: AnandTech; diagrams of the whole input lag chain.

The important point is that while pixel response may be only 2ms, the whole chain input lag can be several tens of milliseconds, and even a hundred milliseconds. There are many factors that can influence input lag, including low game tick rate (e.g. Battlefield 4, which runs at a very low tick rate of 10 Hertz) as well as intentionally added latency (e.g. realistic in-game gunshot latency that more resembles a slightly random latency of a physical gun).

Now that you understand the whole-chain button-to-pixels input lag, Blur Busters presents the world’s first publicly available millisecond-accurate whole-chain input lag measurements of G-SYNC, outside of NVIDIA.

System Specifications & Test Methodology

The test system has the following specifications:

| Component | Specification |
| --- | --- |
| OS | Windows 8.1 (with 1000Hz mouse fix) |
| CPU | Intel i7-3770K |
| GPU | GeForce GTX Titan (Driver 331.93) |
| RAM | 16GB (4 x 4GB) Mushkin 1600MHz DDR3 |
| Mouse | Logitech G9x (modified) |
| Motherboard | ASUS P8Z77V-Pro |
| Monitor | ASUS VG248QE G-SYNC |

The Casio EX-ZR200 camera used has a 1000fps high speed video feature. Although low-resolution, it is known to provide 1 millisecond frame accuracy. A high-contrast spot in the game is recorded for measurement reliability. Multiple test passes are done to average out fluctuations, including CPU, GPU, timing variability, microstutter (early/late frames), and monitor. The screen center is measured (crosshairs, the usual human focus), as it is widely known to accurately represent average screen input lag, for all displays and all modes, including strobed, non-strobed, scanned, CRT, VSYNC OFF, and VSYNC ON. The lag measurement start is LED illumination, and is accurate to 1ms. The lag measurement end is screen reaction, and is accurate to 1ms on modern TN LCDs, as the beginning of visibility in high speed video also represents the beginning of visibility to human eyes. Screen output timing variability (e.g. scan-out behavior) is also averaged out by doing multiple passes.

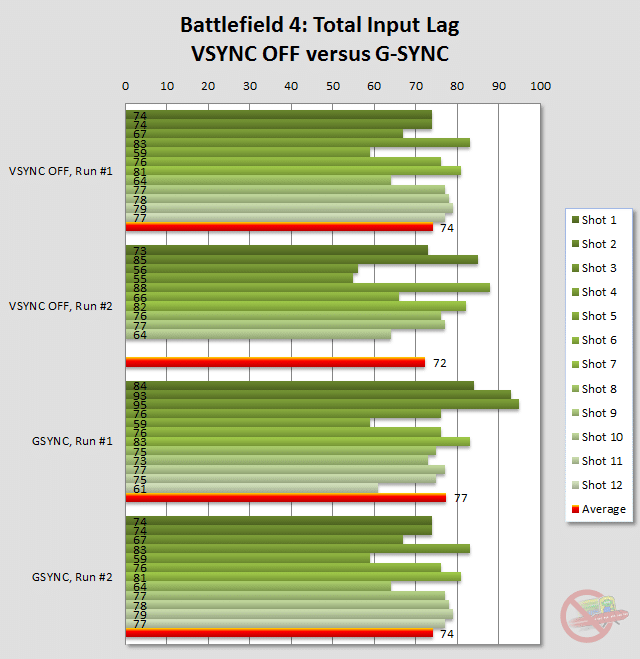

Total Input Lag of Battlefield 4

The game, Battlefield 4, is known to be extremely laggy, even on fast systems. Its low 10Hz tick rate adds a huge amount of input lag, and the game rarely caps out at a monitor’s full refresh rate. Battlefield 4 is a game that typically runs at frame rates that benefit immensely from G-SYNC in eliminating erratic stutters and tearing.

I tested using maximum possible graphics settings, with AA set to 4X MSAA, running in the same location that consistently ran at 84 frames per second during VSYNC OFF and 82 frames per second during G-SYNC. Analysis of high speed videos (VSYNC OFF Run #1, Run #2, and G-SYNC Run #1, Run #2) yields this chart:

From pressing the mouse button to gunshot reaction (measured as number of 1000fps camera frames from mouse button LED illumination, to the frame with the first hint of muzzle flash), the input lag varied massively from trigger to trigger in Battlefield 4.

Note: Shot 11 and Shot 12 are missing from VSYNC OFF Run #2, as the gun started reloading. VSYNC OFF Run #2 Average is taken from 10 instead of 12 shots.

The VSYNC OFF averages of 72ms and 74ms are very similar to the G-SYNC averages of 77ms and 74ms respectively. The difference between the averages appears to fall well below the noise floor of the high variability of Battlefield 4, so Blur Busters considers the differences in averages statistically insignificant. During game play, we were unable to feel the input lag difference between VSYNC OFF and G-SYNC. This is good news; G-SYNC noticeably improved Battlefield 4 visual gameplay fluidity with no input lag compromise.

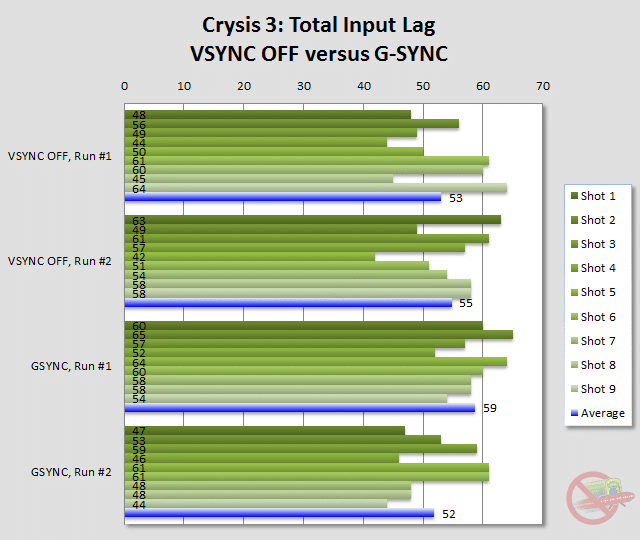

Total Input Lag of Crysis 3

Ever since the famous “Will it run Crysis?”, the Crysis series has been a gold-standard benchmark for mercilessly grinding even the most powerful systems to a halt, especially at maxed-out graphics settings. Armed with a GeForce GTX Titan and running at Ultra settings using 4X MSAA, we stood in a location in the game that could not crack 47 frames per second during VSYNC OFF, and 45 frames per second during G-SYNC.

High speed video analysis (VSYNC OFF Run #1, Run #2, and G-SYNC Run #1, Run #2) yields the following chart:

In high speed video, input lag is measured from mouse button LED illumination, to when the crosshair first visibly “expands” (just before the muzzle flash).

It is obvious that even during bad low-framerate situations (<50fps), Crysis 3 has a much lower total input lag than Battlefield 4. Input lag variability is almost equally massive, however, with routine 10-to-20ms variances from gunshot to gunshot. The difference between the average input lag of 53ms and 55ms for VSYNC OFF and the averages of 59ms and 52ms for G-SYNC is still significantly below the noise floor of the large input lag variability of the game.
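To make the “below the noise floor” comparison concrete, here is a minimal Python sketch that uses only the run averages quoted above. It is a rough sanity check, not a formal significance test; the function name is hypothetical, and using the 10ms lower bound of the quoted shot-to-shot variation as the noise floor is my own simplifying assumption.

```python
from statistics import mean

# Crysis 3 run averages in milliseconds, as quoted in the text.
vsync_off_runs = [53, 55]
gsync_runs = [59, 52]

# Assumption: take the low end of the "routine 10-to-20ms" shot-to-shot
# variation as a conservative noise floor.
noise_floor_ms = 10


def mean_gap_ms(runs_a, runs_b):
    """Absolute difference between the means of two sets of run averages."""
    return abs(mean(runs_a) - mean(runs_b))


gap = mean_gap_ms(vsync_off_runs, gsync_runs)
print(gap)                   # 1.5
print(gap < noise_floor_ms)  # True
```

The same function applied to the Battlefield 4 averages (72ms and 74ms versus 77ms and 74ms) gives a gap of 2.5ms.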

The good news is that we were again unable to detect any input lag degradation from using G-SYNC instead of VSYNC OFF. There were many situations where G-SYNC’s incredible ability to smooth the low 45fps frame rate actually felt better than stuttery 75fps. This is a case where G-SYNC’s currently high price tag is justifiable, as Crysis 3 benefitted immensely from G-SYNC.

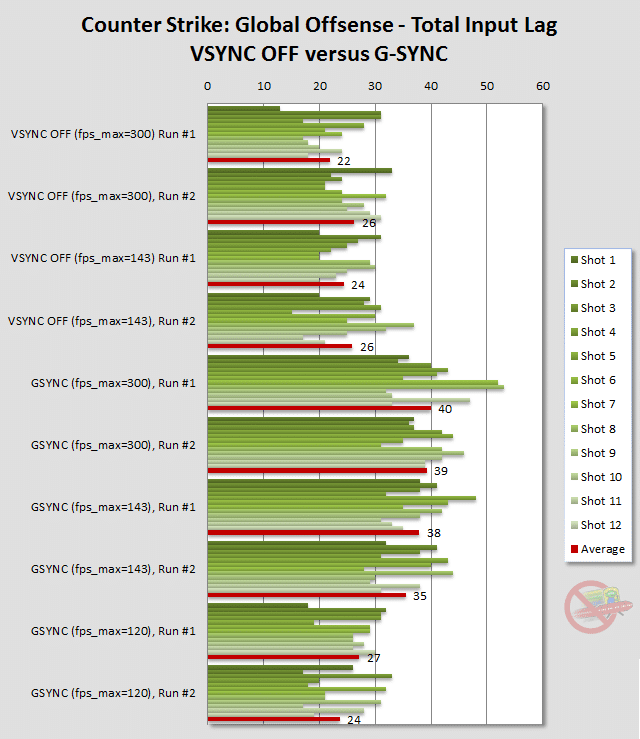

Total Input Lag of Counter-Strike: Global Offensive

The older game, CS:GO, easily runs at 300 frames per second on a GeForce GTX Titan, so it presents an excellent test case for maxing out the frame rate of a G-SYNC monitor. We were curious whether G-SYNC monitors gain input lag when the frame rate is maxed out at the monitor’s maximum refresh rate. We got some rather unusual results, with some very bad news immediately followed by amazingly good news!

I ran the following tests at various different “fps_max” values:

Input Lag – CS:GO – fps_max=300 – VSYNC OFF #1

Input Lag – CS:GO – fps_max=300 – VSYNC OFF #2

Input Lag – CS:GO – fps_max=143 – VSYNC OFF #1

Input Lag – CS:GO – fps_max=143 – VSYNC OFF #2

Input Lag – CS:GO – fps_max=300 – GSYNC #1

Input Lag – CS:GO – fps_max=300 – GSYNC #2

Input Lag – CS:GO – fps_max=143 – GSYNC #1

Input Lag – CS:GO – fps_max=143 – GSYNC #2

Input Lag – CS:GO – fps_max=120 – GSYNC #1

Input Lag – CS:GO – fps_max=120 – GSYNC #2

Analysis of the high speed videos results in the following very interesting chart:

In the high speed videos, I measured from mouse button LED illumination, to first upwards movement of the gun (that occurs before the muzzle flash). For all numbers in milliseconds, see PDF of spreadsheet.

As a fast-twitch game with a fairly high tick rate (up to 128Hz, configurable), whole-chain input lag in CS:GO is very low compared to both Battlefield 4 and Crysis 3. Sometimes, the button-to-pixels input lag even went below 20ms, which is quite low!

At first, it was pretty clear that G-SYNC had significantly more input lag than VSYNC OFF. It was observed that VSYNC OFF at 300fps versus 143fps had fairly insignificant differences in input lag (22ms/26ms at 300fps, versus 24ms/26ms at 143fps). When I began testing G-SYNC, it immediately became apparent that input lag suddenly spiked (40ms/39ms for 300fps cap, 38ms/35ms for 143fps cap). During fps_max=300, G-SYNC ran at only 144 frames per second, since that is the frame rate limit. The behavior felt like VSYNC ON suddenly got turned on.

Now comes the good news: As a last-ditch effort, I lowered fps_max more significantly to 120, and got an immediate, sudden reduction in input lag (27ms/24ms for G-SYNC). I could no longer tell the difference in latency between G-SYNC and VSYNC OFF in CS:GO! Except there was no tearing and no stutters anymore: the full benefits of G-SYNC without the lag of VSYNC ON.
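The CS:GO run averages quoted above can be tabulated to show how much lag each G-SYNC configuration adds over VSYNC OFF. This is a minimal Python sketch using only those quoted averages; the function name is hypothetical, and pooling both VSYNC OFF caps into one baseline is my own simplification (the text notes their differences are fairly insignificant).

```python
from statistics import mean

# Run averages in milliseconds (run #1, run #2), keyed by (mode, fps_max).
results = {
    ("VSYNC OFF", 300): (22, 26),
    ("VSYNC OFF", 143): (24, 26),
    ("G-SYNC", 300): (40, 39),
    ("G-SYNC", 143): (38, 35),
    ("G-SYNC", 120): (27, 24),
}


def extra_lag_vs_baseline(results, baseline_mode="VSYNC OFF"):
    """Mean lag of each non-baseline config minus the pooled baseline mean."""
    baseline = mean(
        ms
        for (mode, _cap), runs in results.items()
        if mode == baseline_mode
        for ms in runs
    )
    return {
        key: mean(runs) - baseline
        for key, runs in results.items()
        if key[0] != baseline_mode
    }


for (mode, cap), extra in extra_lag_vs_baseline(results).items():
    print(f"{mode} fps_max={cap}: {extra:+.1f}ms")
# G-SYNC fps_max=300: +15.0ms
# G-SYNC fps_max=143: +12.0ms
# G-SYNC fps_max=120: +1.0ms
```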

Why is there less lag in CS:GO at 120fps than 143fps for G-SYNC?

We currently suspect that fps_max 143 is frequently colliding with the G-SYNC frame rate cap, possibly having something to do with NVIDIA’s technique of polling the monitor to check whether it is ready for the next refresh. I did hear they are working on eliminating polling behavior, so that eventually G-SYNC frames can begin delivering immediately upon monitor readiness, even if it means simply waiting a fraction of a millisecond in situations where the monitor is nearly finished with its previous refresh.

I did not test other fps_max settings such as fps_max 130 or fps_max 140, which might get closer to the G-SYNC cap without triggering the G-SYNC capped-out slowdown behavior. Normally, G-SYNC eliminates waiting for the monitor’s next refresh interval:

G-SYNC Not Capped Out:

Input Read -> Render Frame -> Display Refresh Immediately

When G-SYNC is capped out at maximum refresh rate, the behavior is identical to VSYNC ON, where the game ends up waiting for the refresh.

G-SYNC Capped Out:

Input Read -> Render Frame -> Wait For Monitor Refresh Cycle -> Display Refresh
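A bit of arithmetic shows why a cap of 143 sits so close to the capped-out case on a 144Hz monitor. The Python sketch below is only a toy calculation under my own assumptions (hypothetical function names, perfectly even frame pacing, and a 144Hz maximum refresh rate); it does not model NVIDIA’s actual polling behavior.

```python
def frame_period_ms(rate_hz: float) -> float:
    """Time between frames (or refreshes) at a given rate, in milliseconds."""
    return 1000.0 / rate_hz


def headroom_ms(fps_cap: float, max_refresh_hz: float = 144.0) -> float:
    """Slack between the game's frame period and the shortest refresh period.

    Positive: each frame arrives after the monitor could already refresh again.
    Negative: frames arrive faster than the monitor can show them (capped out).
    """
    return frame_period_ms(fps_cap) - frame_period_ms(max_refresh_hz)


for cap in (300, 143, 120):
    print(f"fps_max={cap}: headroom {headroom_ms(cap):+.3f}ms")
```

At fps_max 300 the headroom is negative, so the game is always waiting on the monitor. At fps_max 143 the headroom is only about 0.05ms, so any frame that arrives slightly early hits the cap. At fps_max 120 there is about 1.39ms of slack per frame.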

This is still low-latency territory

Even when capped out, the total-chain input lag of 40ms is still extremely low for button-to-pixels latency. This includes game engine, drivers, CPU, GPU, and cable lag, not just the display itself. Consider this: Some old displays had more input lag than this, in the display alone (especially HDTV displays, and some older 60Hz VA monitors)!

In an extreme-case scenario, photodiode oscilloscope tests of a blank Direct3D buffer (alternating white/black) show a 2ms to 4ms latency between Direct3D Present() and the first LCD pixels illuminating at the top edge of the screen. This covers mostly cable transmission latency and pixel transition latency. All current models of ASUS/BENQ 120Hz and 144Hz monitors are capable of zero-buffered real-time scanout, resulting in sub-frame latencies (including in G-SYNC mode).

Game Developer Recommendations

It is highly recommended that Game Options include a fully adjustable frame-rate capping capability, with the ability to turn it on/off. The gold standard is the fps_max setting found in the Source Engine, which throttles a game’s frame rate to a specific maximum.

These frame rate limiters hugely benefit G-SYNC because the game now controls the timing of monitor refreshes during G-SYNC. By allowing users to configure a frame rate cap somewhat below G-SYNC’s maximum refresh rate, the monitor can begin scanning the refresh immediately after rendering, without waiting for the blanking interval.
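For developers who want a starting point, here is a minimal sketch of a sleep-based frame rate cap in Python. It is not how the Source Engine implements fps_max; the class name, the `fps_max` parameter, and the injectable `clock`/`sleep` arguments are all hypothetical choices for illustration, and a real engine would likely need a more precise wait than a plain sleep.

```python
import time


class FrameLimiter:
    """Sleep-based frame rate cap (hypothetical helper, for illustration only)."""

    def __init__(self, fps_max, clock=time.perf_counter, sleep=time.sleep):
        # fps_max <= 0 turns the cap off, so it can be an on/off game option.
        self.interval = 1.0 / fps_max if fps_max > 0 else 0.0
        self.clock = clock
        self.sleep = sleep
        self.next_deadline = clock()

    def wait(self):
        """Call once per frame, before starting the next frame."""
        if self.interval == 0.0:
            return
        now = self.clock()
        if now < self.next_deadline:
            self.sleep(self.next_deadline - now)
        # If the game fell behind, restart from "now" instead of bursting
        # several frames back-to-back to catch up.
        self.next_deadline = max(self.next_deadline, now) + self.interval


if __name__ == "__main__":
    limiter = FrameLimiter(fps_max=120)
    start = time.perf_counter()
    for _ in range(5):
        # A real game would read input and render the frame here.
        limiter.wait()
    print(f"5 frames took {(time.perf_counter() - start) * 1000:.1f}ms")
```

Taking the clock and sleep functions as arguments keeps the limiter easy to test with a fake clock, and makes it straightforward to swap in a higher-precision wait later.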

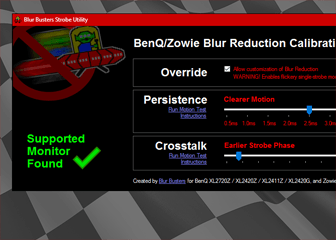

What about LightBoost – Ultra Low Motion Blur?

The cat is now out of the bag, thanks to many sources and several news releases, so Blur Busters can comment on the LightBoost sequel that’s included in all G-SYNC monitors!

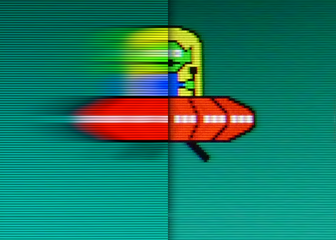

All G-SYNC monitors include a LightBoost sequel called ULMB, which stands for Ultra Low Motion Blur. It is activated by a button on the monitor, is available in 85Hz/100Hz/120Hz modes, and eliminates most motion blur in the same manner as LightBoost. ULMB is a motion blur reduction strobe backlight (high speed video) that lowers persistence by flashing the backlight only on fully refreshed frames (almost completely bypassing GtG pixel transitions on the LCD).

For those who do not yet fully understand strobing, the educational TestUFO animations, Black Frame Strobing Animation and Eye Tracking Motion Blur (Persistence) Animation, help explain the principle of modern strobe backlights in gaming monitors such as LightBoost, ULMB, Turbo240, or BENQ’s Blur Reduction. Also see Photos: 60Hz versus 120Hz versus LightBoost.

More tests for ULMB are coming in a future article. That said, here are the preliminary Blur Busters impressions:

- No Utility Needed

You only need to press a button on the monitor to turn ULMB strobing ON/OFF. It is very easy to turn on/off at any time, even at the Desktop or while inside a game, as long as you’re running in non-GSYNC mode.

- Color quality is significantly better than VG248QE LightBoost.

There is no reddish or crimson tinting. Although the picture is not as colorful as some higher end strobe backlight monitors (e.g. EIZO FG2421 with Turbo240 strobing), the picture is much better than VG248QE LightBoost. The contrast ratio is less than non-LightBoost but slightly better than LightBoost. Brightness is the same as LightBoost=100%.

- Colors are adjustable, and this works in games.

You can finally recalibrate “LightBoost” colors via the monitor itself, via a utility. There is now a HOWTO: Adjust Colors on VG248QE G-SYNC.

- Fewer LCD Inversion and banding artifacts.

There are fewer artifacts visible at www.testufo.com/inversion than on all other LightBoost monitors that I have seen. Even the TestUFO Flicker Test (full screen) looks much cleaner and more uniform.

- Strobe Length Not Adjustable

Many readers have asked if motion clarity can be adjusted (similar to LightBoost 10% versus 50% versus 100%). Unfortunately, persistence is not currently adjustable.

Note: Blur Busters has recommended that NVIDIA add an adjustment (e.g. a DDC/CI command) for the strobe length of the strobe backlight in future G-SYNC monitors, as a trade-off between brightness and motion clarity.

- LCD Contrast Ratio Still Lower in Strobe Mode

The contrast ratio remains approximately the same as LightBoost on the same panel. Currently, 27″ panels have less contrast ratio degradation in strobed mode than current 24″ 1ms panels. Some of us prefer the full contrast range, even at the expense of slightly more overdrive artifacts. One old LightBoost monitor (the ASUS VG278H, original H suffix, not HE suffix, when configured via OSD Contrast 90-94%) is one of the few models observed to achieve nearly 1000:1 contrast ratio in LightBoost mode, with some ghosting trade-offs. In contrast, the 24″ panel is only barely able to exceed 500:1 contrast ratio. That being said, VG248QE ULMB is still much better looking than VG248QE LightBoost.

Note: Blur Busters has asked NVIDIA to further investigate this issue, to see if there are ways to decrease the contrast ratio difference between strobed and non-strobed modes in future monitors.

Should I use G-SYNC or ULMB?

Currently, G-SYNC and ULMB are mutually exclusive – you cannot use both simultaneously (yet), since it is a huge engineering challenge to combine the two.

G-SYNC: Eliminates stutters, tearing and reduces lag, but not motion blur.

LightBoost/ULMB: Eliminates motion blur, but not stutters or tearing.

Motion blur eliminating strobe backlights (LightBoost or ULMB) always look best when strobe rate matches frame rate. Such strobe backlights tend to run at high refresh rates only, in order to avoid flicker (to avoid eyestrain-inducing 60Hz style CRT flicker).

We found that G-SYNC looked nicer at the low frame rates experienced in both Battlefield 4 and Crysis 3, while ULMB looked very nice during CS:GO. We have not yet done extensive tests on input lag, but preliminary checks show that ULMB adds only approximately 4ms (center/average) of input lag compared to VSYNC OFF or well frame-capped G-SYNC. If you prefer the motion clarity of ULMB but do not want to fully lock the frame rate to the refresh rate, then a frame rate close to it (e.g. fps_max 118) works well as a latency compromise.

It is a personal preference whether to use G-SYNC or ULMB. As a rule of thumb:

G-SYNC: Enhances motion quality of lower & stuttery frame rates.

LightBoost/ULMB: Enhances motion quality of higher & consistent frame rates.

Yes, the G-SYNC upgrade kit includes ULMB. ULMB works in multiple monitor mode (much more easily than LightBoost), even though G-SYNC can only work on one monitor at a time. With current NVIDIA drivers, G-SYNC only works on the primary monitor.

Conclusion

As even the input lag in CS:GO was solvable, I found no perceptible input lag disadvantage to G-SYNC relative to VSYNC OFF, even in older Source Engine games, provided the games were configured correctly (NVIDIA Control Panel set to use G-SYNC, and the game configuration updated accordingly). G-SYNC gives the game player a license to use higher graphics settings in the game, while keeping the gameplay smooth.

We are very glad that manufacturers are now paying serious attention to strobe backlights, since this has been Blur Busters’ raison d’être ever since our domain name was www.scanningbacklight.com in 2012, during the Arduino Scanning Backlight Project.