How Low Should You Go?

Blur Busters was the world’s first site to test G-SYNC, in Preview of NVIDIA G-SYNC, Part #1 (Fluidity), using an ASUS VG248QE pre-installed with a G-SYNC upgrade kit. At the time, the consensus was that limiting the framerate to between 135 and 138 FPS at 144Hz was enough to avoid V-SYNC-level input lag.

However, much has changed since the first G-SYNC upgrade kit was released. Back then, the Minimum Refresh Range wasn’t in place, the V-SYNC toggle had yet to be exposed, G-SYNC did not support borderless or windowed mode, and there was even a small performance penalty on the Kepler architecture (Maxwell and later architectures corrected this).

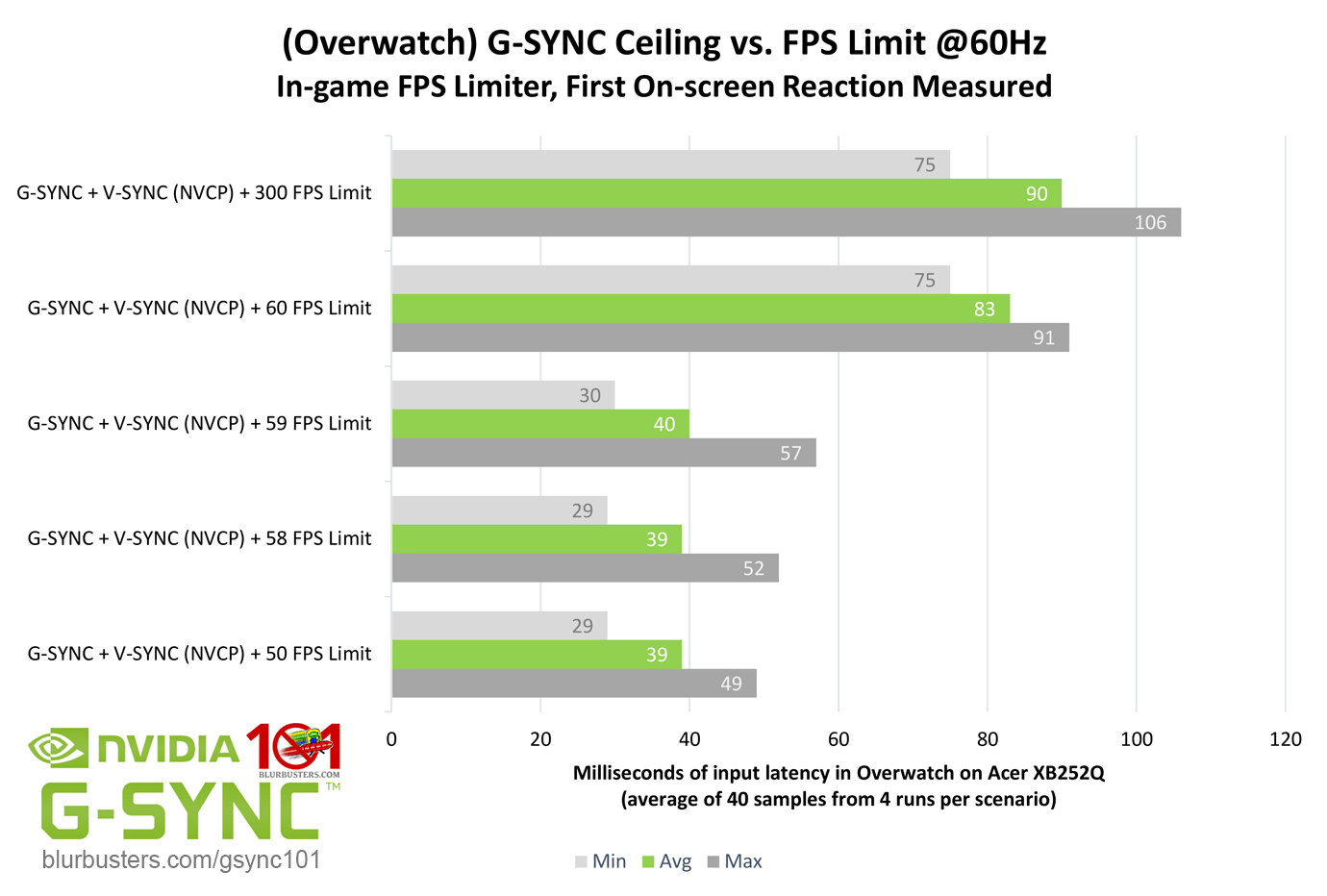

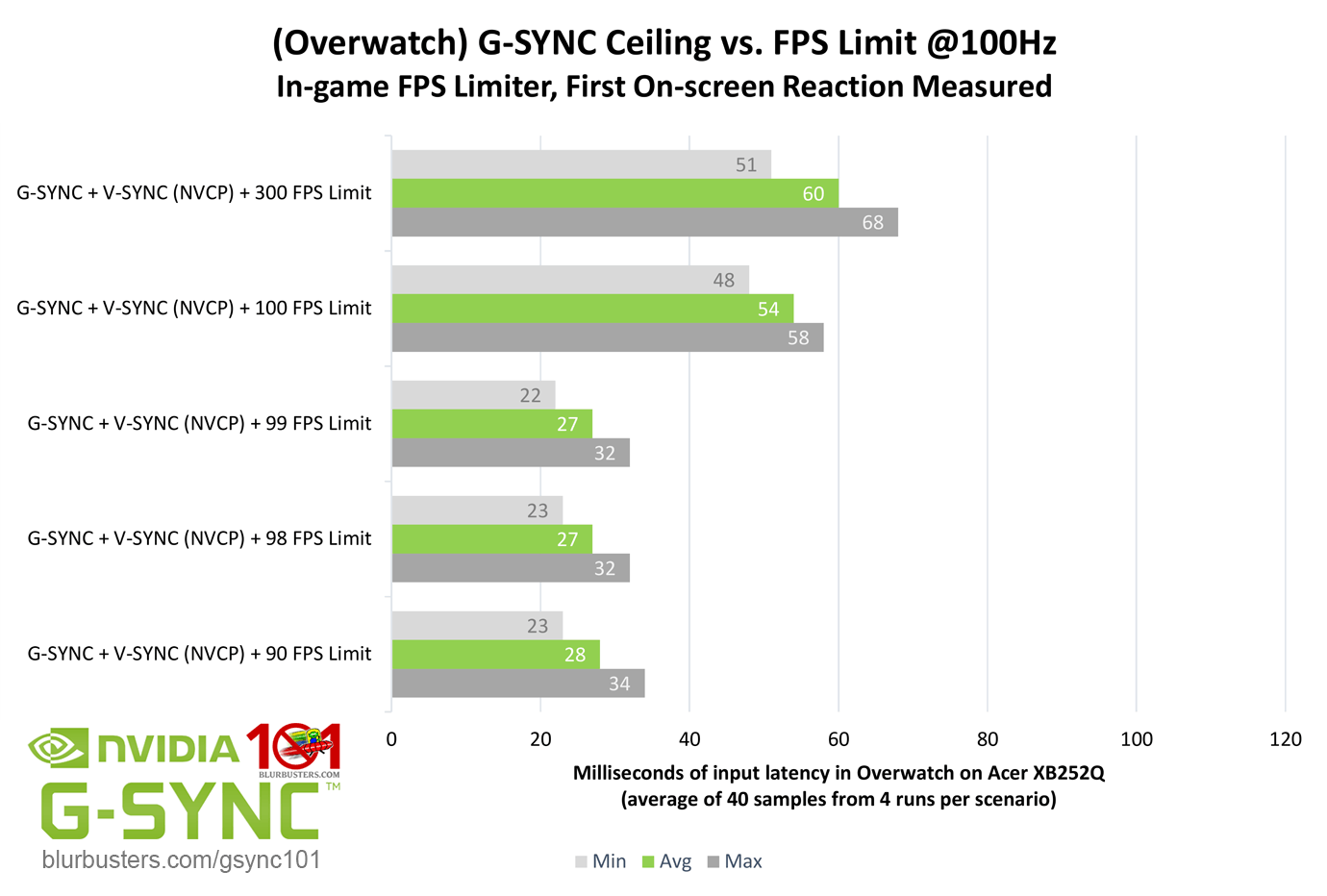

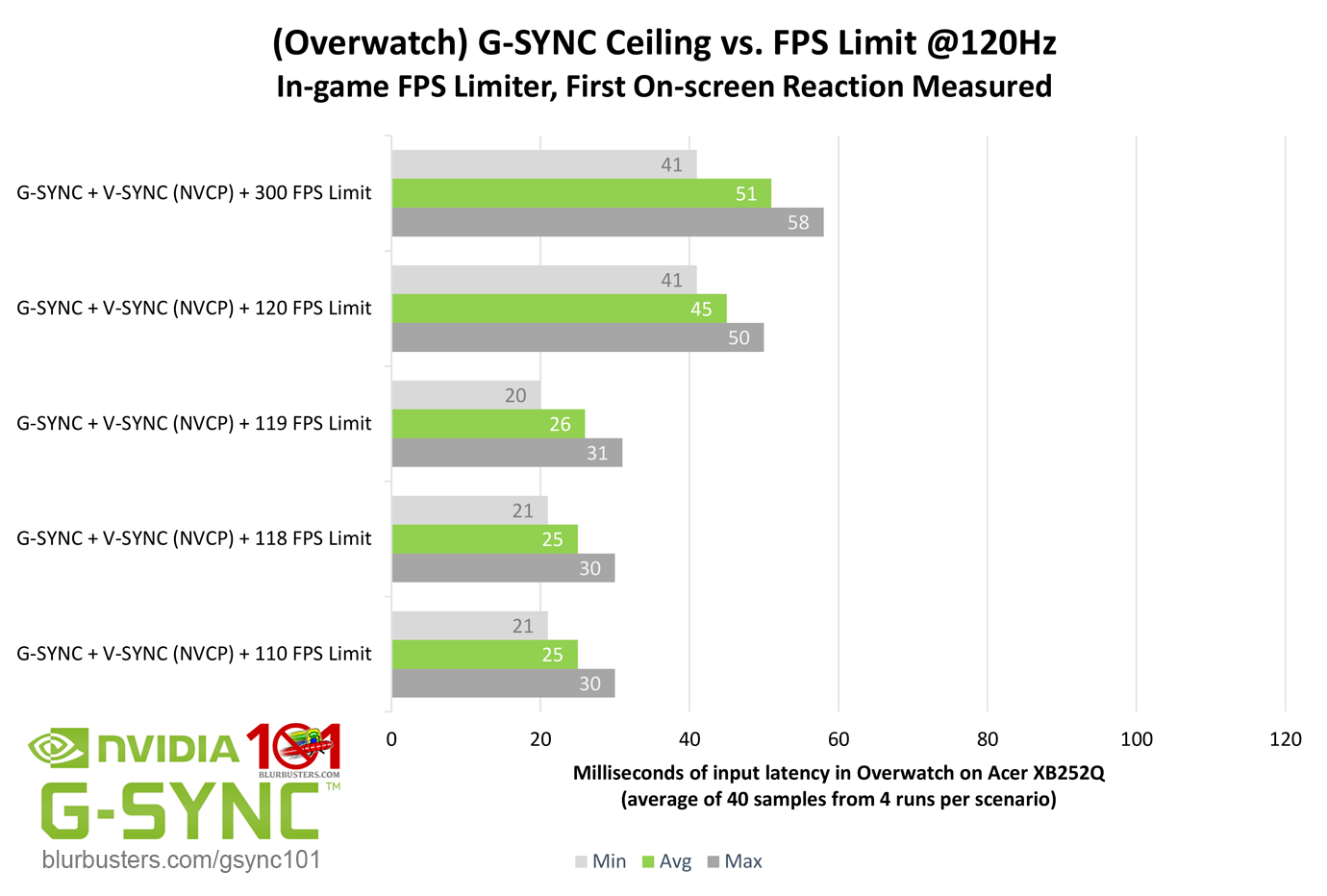

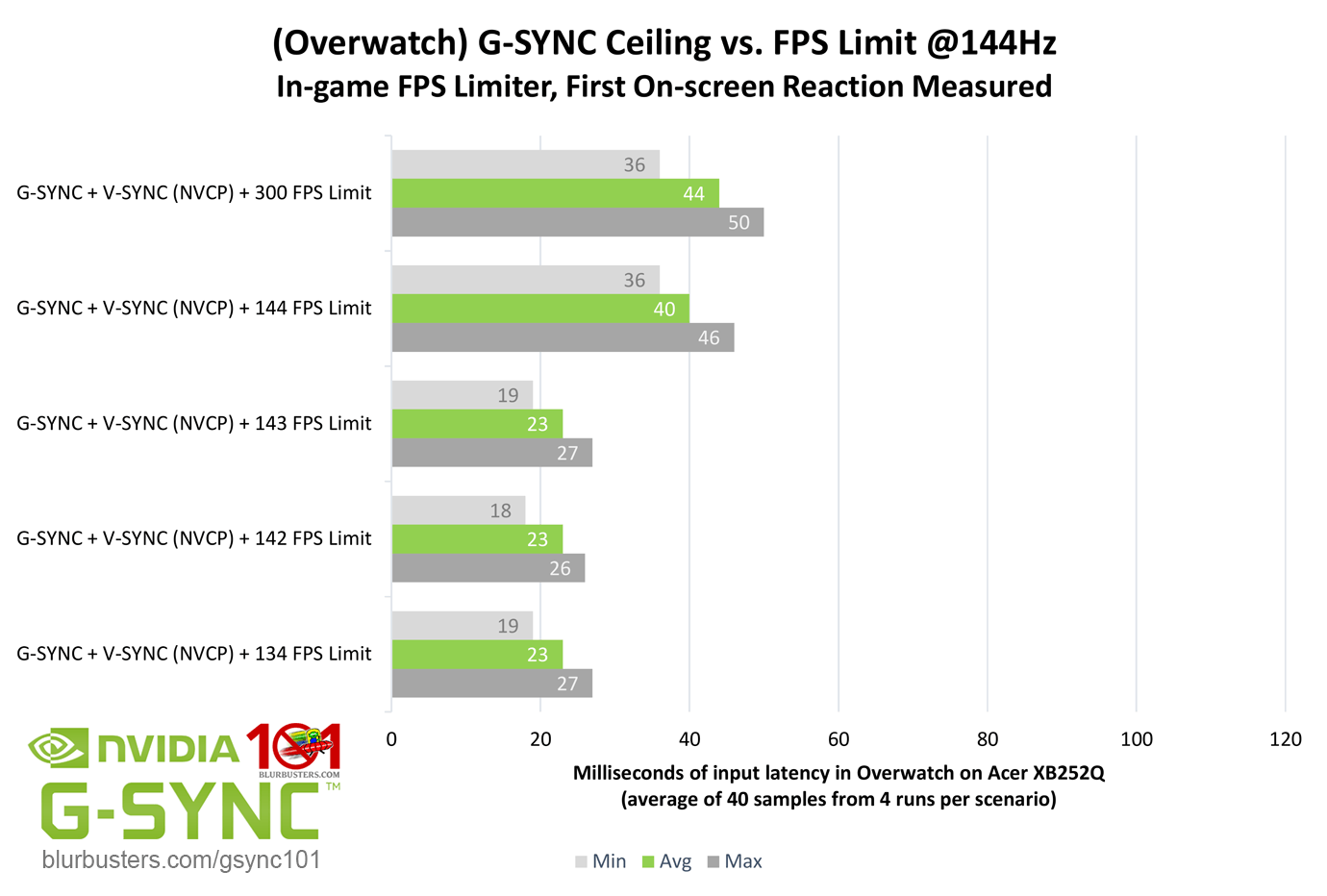

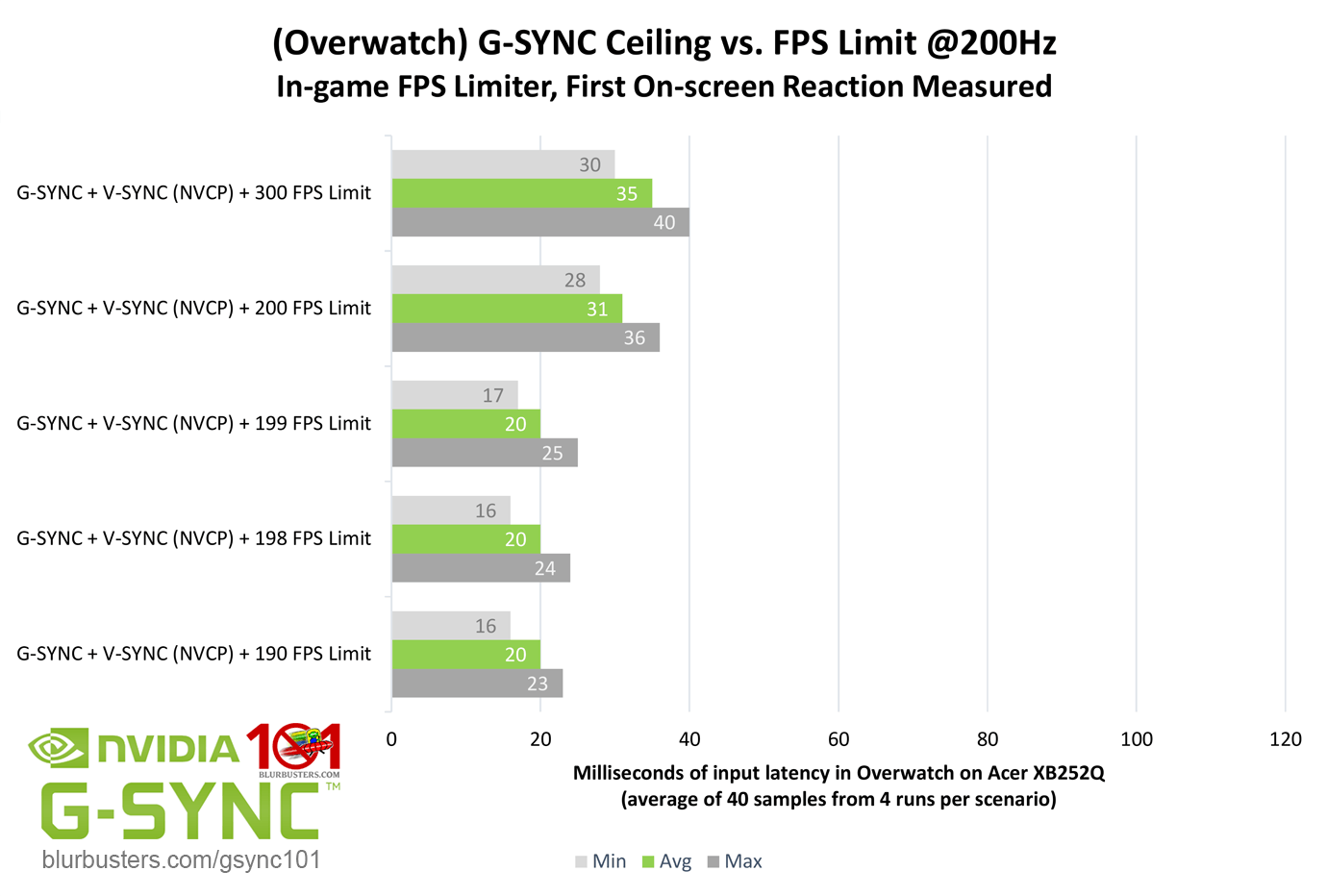

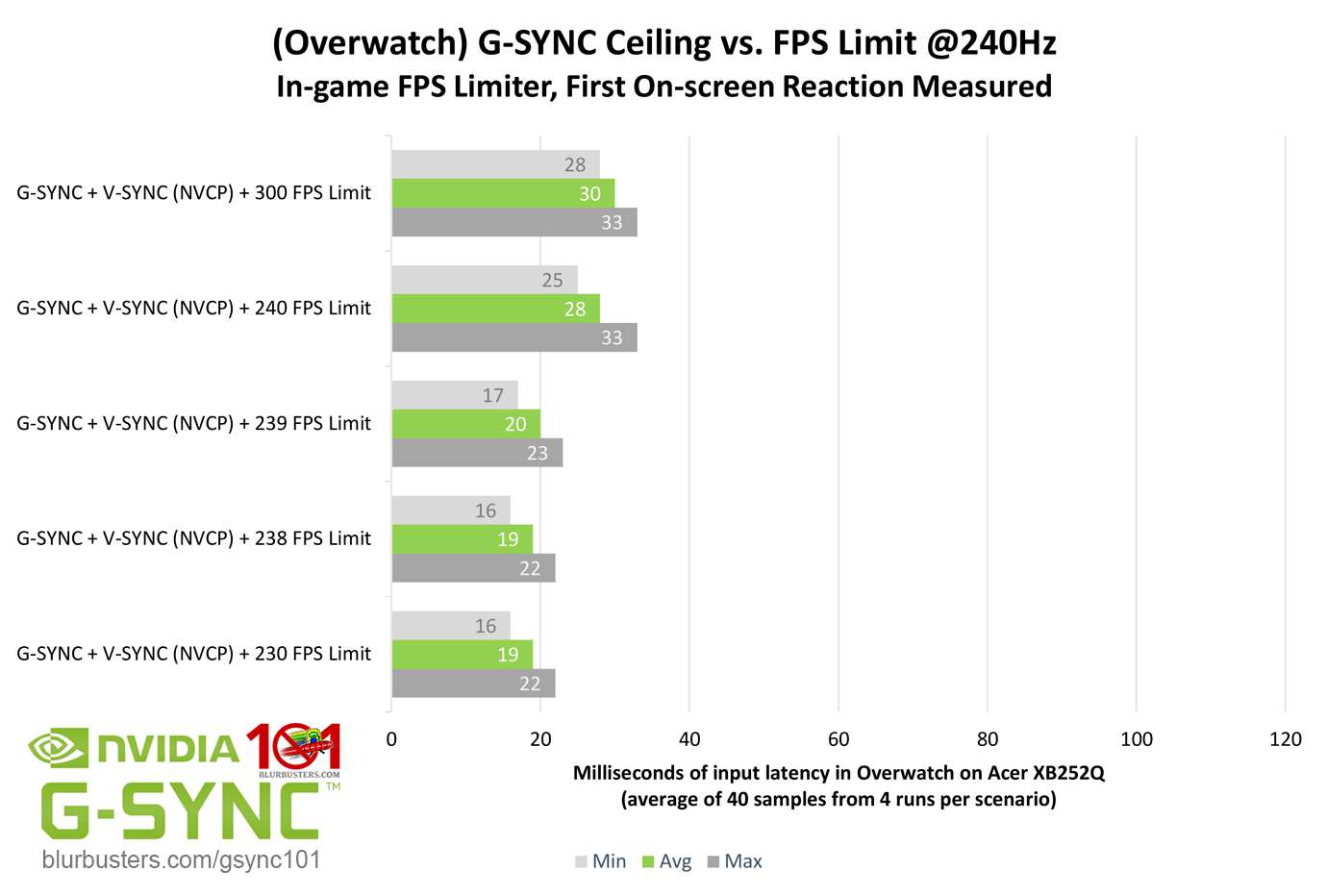

My own testing in my Blur Busters Forum thread found that just 2 FPS below the refresh rate was enough to avoid the G-SYNC ceiling. However, now armed with improved testing methods and equipment, is this still the case, and does the required FPS limit change depending on the refresh rate?

As the results show, just 2 FPS below the refresh rate is indeed still enough to avoid the G-SYNC ceiling and prevent V-SYNC-level input lag, and this number does not change, regardless of the maximum refresh rate in use.

To leave no stone unturned, an “at” FPS, -1 FPS, -2 FPS, and finally -10 FPS limit were tested to show that no real improvement can be had even far below -2 FPS. In fact, limiting the FPS lower than needed can slightly increase input lag, especially at lower refresh rates: each frame lost to the cap raises the frametime by a larger amount there, so frame delivery slows as the sustained framerate drops.
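The frametime arithmetic behind that last point can be sketched in a few lines. This is only the theoretical time per frame (1000 / FPS), not a measurement of input lag, and the function names and the 60Hz and 240Hz refresh rates are my own picks for illustration rather than figures taken from the charts.

```python
def frametime_ms(fps: float) -> float:
    """Time per frame in milliseconds at a sustained framerate."""
    return 1000.0 / fps


def added_frametime_ms(refresh_hz: int, offset_fps: int) -> float:
    """Extra time per frame when capping offset_fps below the refresh rate."""
    return frametime_ms(refresh_hz - offset_fps) - frametime_ms(refresh_hz)


# Illustrative refresh rates (assumed); compare a -2 FPS and a -10 FPS cap.
for hz in (60, 144, 240):
    for offset in (2, 10):
        extra = added_frametime_ms(hz, offset)
        print(f"{hz}Hz, -{offset} FPS: +{extra:.2f} ms per frame")
```

Under these assumptions, a -10 FPS cap at 60Hz stretches each frame by roughly 3.3 ms, while the same cap at 240Hz adds under 0.2 ms, which is why the penalty for over-limiting shows up mostly at lower refresh rates.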

As for the “perfect” number, going by the results, and taking into consideration variances in accuracy from FPS limiter to FPS limiter, along with differences in performance from system to system, a -3 FPS limit is the safest bet, and is my new recommendation. A lower FPS limit, at least for the purpose of avoiding the G-SYNC ceiling, will simply rob frames.
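As a quick reference, the recommendation reduces to a subtraction. The helper below is a hypothetical convenience function, not part of any FPS limiter or driver; it simply applies the -3 FPS margin to whatever maximum refresh rate is in use.

```python
def gsync_fps_cap(refresh_hz: int, margin_fps: int = 3) -> int:
    """Return an FPS limit that sits margin_fps below the refresh rate.

    The default margin of 3 follows the recommendation in the text;
    the function itself is illustrative only.
    """
    if margin_fps < 0 or margin_fps >= refresh_hz:
        raise ValueError("margin_fps must be between 0 and refresh_hz - 1")
    return refresh_hz - margin_fps


# Example refresh rates (assumed for illustration).
for hz in (60, 144, 240):
    print(f"{hz}Hz -> limit to {gsync_fps_cap(hz)} FPS")
```

The resulting value would then be entered into whichever FPS limiter is in use; since limiters differ in accuracy, the margin is kept as a parameter rather than hard-coded.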